Understanding the key differences between latency vs throughput vs bandwidth is essential for anyone working in computer networking, telecommunications, cloud computing, or related fields. While these terms are often used interchangeably, they actually refer to distinct metrics that measure different aspects of a network’s performance.

In this article, you will learn how latency, throughput, and bandwidth differ in computer networking. We compare their definitions, units of measurement, influencing factors, and optimization techniques, all of which matter for managing network performance.

Key Takeaways

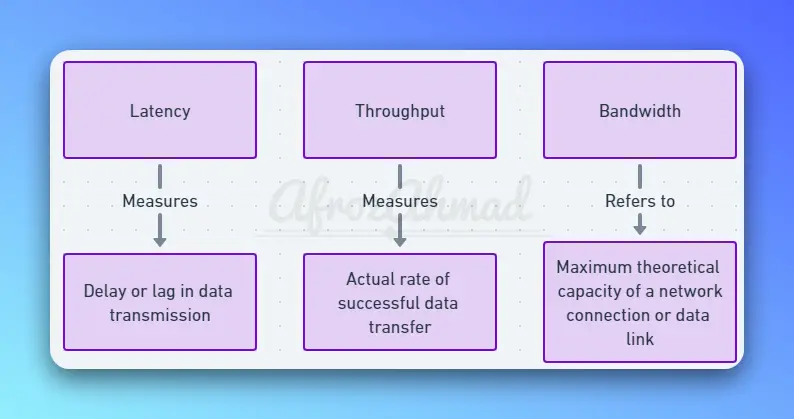

- Latency measures the delay or lag in data transmission, while throughput measures the actual rate of successful data transfer.

- Bandwidth refers to the maximum theoretical capacity of a network connection or data link.

- Lower latency results in more responsive applications and better interactivity. Higher throughput allows faster data transfers. More bandwidth enables support for more concurrent users and higher data volumes.

- Though related, optimizing one metric does not necessarily improve the others. A balanced approach is required to optimize overall network performance.

- Latency limits throughput: for window-based protocols such as TCP, higher latency lowers the maximum achievable throughput. Higher bandwidth, however, does not directly reduce latency or guarantee higher throughput.

- Monitoring tools, diagnostics, and testing methods can provide visibility into latency, throughput, and bandwidth to identify bottlenecks.

- New technologies, protocols, network upgrades, and techniques like caching and compression can help optimize these metrics.

- Understanding the differences between latency, throughput, and bandwidth is key for network engineers, cloud architects, application developers, and others working to deliver responsive, high-speed services.

What is Latency?

Latency measures the time it takes for data to get from one designated point to another. It is typically measured in milliseconds (ms).

In networking, latency usually refers to the delay between when a packet is sent from a source device and when it is received at the destination. The term is also used for other kinds of delay. Some key examples include:

- Network latency – The time for a packet to travel from a source computer to a destination computer across a network. This includes delays introduced by network equipment like routers and switches.

- Storage latency – The time for a block of data to be read from or written to a storage device like a hard disk or SSD.

- Display latency – The time for an output device, like a monitor, to show a change after receiving new data from the graphics card.

Lower latency is always desirable. Latency directly impacts the responsiveness of networked applications. If latency is high, applications will seem sluggish as users wait for network requests to complete. Latency is often a “silent killer” of application performance.

Factors that contribute to high latency include:

- Physical distance and number of hops between source and destination

- Network congestion and queuing delays

- Slow network equipment

- Time to process protocols like TCP/IP

- Disk seek time for storage access

What is Throughput?

Throughput measures the amount of data successfully transferred between two points over a given time period. It is typically measured in bits or bytes per second (bps/Bps).

Throughput indicates the rate of successful data transfer across a network or channel. For example:

- Network throughput – The rate of successful message delivery over a network. This is affected by bandwidth, latency, error rate, and protocol overhead.

- Disk throughput – The rate data can be read from or written to a disk, like MB/s. This depends on disk speed, access patterns, queues, and caches.

- System throughput – The overall rate at which a computer system processes data. This depends on resources like CPU, memory, storage, and network.
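Protocol overhead alone keeps throughput below the raw link rate. As a rough sketch, the Python snippet below estimates best-case TCP goodput over Ethernet. It assumes a 1500-byte MTU, IPv4 and TCP headers without options, and full-size segments. The constant and function names are illustrative. Under those assumptions a 1 Gbps link carries at most about 949 Mbps of application data, before ACKs, loss, or retransmissions reduce it further.

```python
# Sketch: best-case TCP goodput over Ethernet, assuming a 1500-byte MTU,
# IPv4 + TCP headers with no options (20 + 20 bytes), and full-size segments.

ETH_HEADER = 14        # destination MAC, source MAC, EtherType
ETH_FCS = 4            # frame check sequence
PREAMBLE_SFD = 8       # preamble + start frame delimiter (on the wire only)
INTERFRAME_GAP = 12    # minimum idle time between frames, in byte times
IP_TCP_HEADERS = 40    # IPv4 (20) + TCP (20), no options


def tcp_goodput_bps(link_bps: float, mtu: int = 1500) -> float:
    """Upper bound on application payload rate for bulk TCP over Ethernet."""
    payload = mtu - IP_TCP_HEADERS                 # TCP payload per segment (MSS)
    on_wire = mtu + ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTERFRAME_GAP
    return link_bps * payload / on_wire


if __name__ == "__main__":
    goodput = tcp_goodput_bps(1e9)
    print(f"1 Gbps link -> about {goodput / 1e6:.1f} Mbps of TCP payload")
```

Enabling TCP timestamps (12 extra bytes per segment) or using IPv6 (a 40-byte header) lowers the figure slightly, while jumbo frames raise it.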

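Higher throughput is almost always better, because it means more data is being delivered successfully per second. Applications can partially compensate for high latency by keeping more data in flight, for example with larger TCP windows or parallel connections, so that transfers still complete quickly.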

Factors that limit throughput include:

- Insufficient bandwidth

- High error rates requiring retransmission

- Network congestion and contention

- Low disk I/O speed

- Resource bottlenecks like slow CPU or memory

What is Bandwidth?

Bandwidth refers to the maximum theoretical data transfer rate of a network or data connection. It measures the potential throughput capacity.

Bandwidth is typically expressed as a bitrate in bits per second (bps) or bytes per second (Bps). Some key examples:

- Network bandwidth – The maximum speed of a network link or connection. Ethernet links commonly run at 10 Mbps, 100 Mbps, 1 Gbps, or higher. Wireless bandwidth depends on the technology, spectrum, and channel width.

- Data plan bandwidth – The maximum download/upload speed permitted by an ISP. This is separate from data caps such as 10 GB/month on mobile plans, which limit the total volume of data transferred, not its rate.

- Bus bandwidth – The throughput capacity of a bus like PCIe, determined by interface width and clock speed.

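Higher bandwidth does not automatically mean higher throughput. Throughput is capped by the link's bandwidth, but with window-based protocols like TCP it is also capped by how much data the sender can have in flight per round trip (roughly window size ÷ round-trip time). For example, with the same fixed window, a 1 Gbps network with a 500 ms round-trip time will have much lower throughput than a 100 Mbps network with a 5 ms round-trip time.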
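A common way to quantify this for TCP is the bandwidth-delay product (BDP): the number of bytes that must be in flight to keep a link fully busy. The sketch below assumes a single TCP connection whose window is fixed at 65,535 bytes, the largest window possible without the window scaling option. The function names are illustrative.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the link fully utilized."""
    return bandwidth_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: int, rtt_s: float,
                                  bandwidth_bps: float) -> float:
    """A sender can deliver at most one window per round trip,
    and never more than the link bandwidth."""
    return min(bandwidth_bps, window_bytes * 8 / rtt_s)


if __name__ == "__main__":
    window = 65_535  # largest TCP window without window scaling
    for bw, rtt in [(1e9, 0.500), (100e6, 0.005)]:
        tput = window_limited_throughput_bps(window, rtt, bw)
        bdp = bandwidth_delay_product_bytes(bw, rtt)
        print(f"{bw / 1e6:.0f} Mbps, {rtt * 1000:.0f} ms RTT: "
              f"~{tput / 1e6:.2f} Mbps achievable, BDP = {bdp:,.0f} bytes")
```

With that window, the 1 Gbps, 500 ms path tops out around 1 Mbps, while the 100 Mbps, 5 ms path can run at its full 100 Mbps. Filling the 1 Gbps path would require roughly 62.5 MB in flight, which is why modern TCP stacks negotiate window scaling.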

Factors that determine available bandwidth include:

- Network medium – Wired, fiber optics, wireless spectrum

- Interface and technology – Ethernet, WiFi standards, LTE, etc.

- Allocated channels/frequency bands

- Noise, interference
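Noise, interference, and channel width set a hard ceiling on how fast a channel can carry data. The Shannon-Hartley theorem expresses that ceiling as C = B log2(1 + S/N), where B is the channel width in hertz (the original, signal-processing sense of "bandwidth") and S/N is the signal-to-noise ratio. A minimal Python sketch follows. The channel widths and SNR values are chosen only for illustration.

```python
import math


def shannon_capacity_bps(channel_width_hz: float, snr_db: float) -> float:
    """Shannon-Hartley upper bound on error-free bit rate over a noisy channel."""
    snr_linear = 10 ** (snr_db / 10)   # convert dB to a power ratio
    return channel_width_hz * math.log2(1 + snr_linear)


if __name__ == "__main__":
    for width_mhz, snr_db in [(20, 30), (40, 30), (20, 10)]:
        cap = shannon_capacity_bps(width_mhz * 1e6, snr_db)
        print(f"{width_mhz} MHz at {snr_db} dB SNR: ~{cap / 1e6:.0f} Mbps ceiling")
```

A 20 MHz channel at 30 dB SNR tops out near 199 Mbps. Doubling the channel width at the same SNR doubles the ceiling, and a noisier 10 dB channel drops to about 69 Mbps. Real WiFi and LTE link rates sit below this bound.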

Now that we’ve clearly defined latency, throughput, and bandwidth, let’s compare them head-to-head.

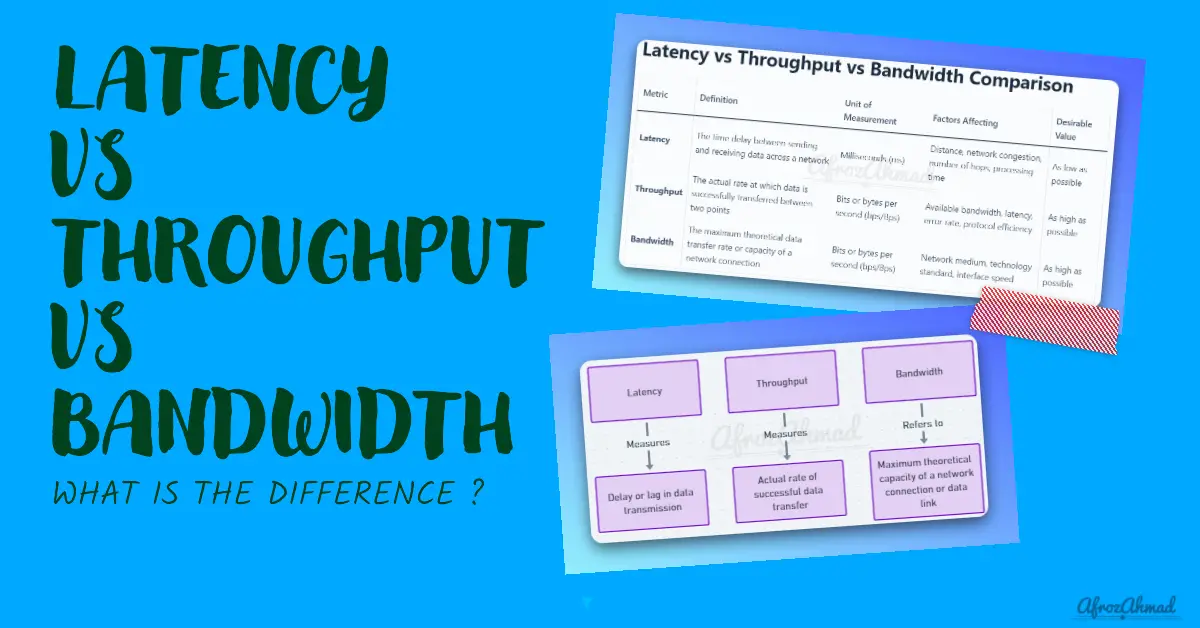

Latency vs Throughput vs Bandwidth Comparison

| Metric | Definition | Unit of Measurement | Factors Affecting | Desirable Value |
|---|---|---|---|---|
| Latency | The time delay between sending and receiving data across a network | Milliseconds (ms) | Distance, network congestion, number of hops, processing time | As low as possible |
| Throughput | The actual rate at which data is successfully transferred between two points | Bits or bytes per second (bps/Bps) | Available bandwidth, latency, error rate, protocol efficiency | As high as possible |
| Bandwidth | The maximum theoretical data transfer rate or capacity of a network connection | Bits or bytes per second (bps/Bps) | Network medium, technology standard, interface speed | As high as possible |

Key Differences:

- Latency indicates delay, throughput measures the actual data transfer rate, and bandwidth specifies maximum capacity.

- Optimizing latency reduces delays, improving throughput increases transfer speed, and adding bandwidth enhances capacity.

- Latency limits throughput – for window-based protocols such as TCP, higher latency lowers the maximum achievable throughput.

- Throughput cannot exceed bandwidth, but higher bandwidth alone does not reduce latency or guarantee higher throughput.

- Applications may require balancing tradeoffs between latency, throughput, and bandwidth based on their usage.

- Monitoring and measuring each metric provides insight into network performance and bottlenecks.

The Relationship Between Latency, Throughput, and Bandwidth

Latency, throughput, and bandwidth influence each other:

- Latency directly impacts throughput. For window-based protocols like TCP, higher latency reduces the maximum throughput a single connection can achieve.

- Throughput cannot exceed available bandwidth.

- Interactive high-bandwidth applications like video conferencing and live streaming also require low latency for smooth delivery.

Understanding their interdependency is key to boosting overall network speed and responsiveness.

Why Latency, Throughput, and Bandwidth Matter

Latency, throughput, and bandwidth play pivotal roles in user experience and network efficiency:

Latency Impacts

- Responsiveness of applications

- Delays and lags during use

- Ability to support real-time services

Throughput Effects

- Speed of data transfers

- Congestion and bottlenecks

- Capacity to handle concurrent users

Bandwidth Effects

- Number of devices supported

- Volume of data transmitted

- Access to bandwidth-heavy apps

Factors Influencing Latency

Elements that contribute to latency include:

- Physical distance and propagation delay

- Network congestion and queuing

- Routing path and number of hops

- Processing overhead at nodes

- Retransmissions from errors or loss

- Protocol handshake delays

Latency accumulates at each step. Optimizing routing and reducing hops is key.
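To see how delay builds up hop by hop, here is a simplified store-and-forward model in Python. It assumes a signal speed of about 2 × 10^8 m/s in fiber and uses made-up per-hop processing and queuing times; real values come from measurement. The `Hop` class and function name are hypothetical.

```python
from dataclasses import dataclass

SPEED_IN_FIBER_M_PER_S = 2.0e8   # roughly two-thirds of the speed of light


@dataclass
class Hop:
    distance_m: float      # length of the link to the next node
    link_bps: float        # bandwidth of that link
    processing_s: float    # forwarding/lookup time at the node (assumed)
    queuing_s: float       # time waiting in the output queue (assumed)


def one_way_latency_s(hops: list[Hop], packet_bytes: int) -> float:
    """Sum propagation, transmission, processing, and queuing delay per hop.
    Store-and-forward: each node receives the whole packet before sending it on."""
    total = 0.0
    for hop in hops:
        propagation = hop.distance_m / SPEED_IN_FIBER_M_PER_S
        transmission = packet_bytes * 8 / hop.link_bps
        total += propagation + transmission + hop.processing_s + hop.queuing_s
    return total


if __name__ == "__main__":
    path = [
        Hop(distance_m=1_000, link_bps=1e9, processing_s=20e-6, queuing_s=0.0),
        Hop(distance_m=2_000_000, link_bps=10e9, processing_s=20e-6, queuing_s=1e-3),
        Hop(distance_m=5_000, link_bps=1e9, processing_s=20e-6, queuing_s=0.0),
    ]
    latency = one_way_latency_s(path, packet_bytes=1500)
    print(f"one-way latency: {latency * 1000:.2f} ms")
```

For these three example hops the total is about 11.1 ms, almost all of it propagation and queuing on the 2,000 km link. That is why shortening the path usually helps more than faster forwarding hardware.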

Factors Affecting Throughput

Throughput is influenced by:

- Available bandwidth

- Transmission technology and medium

- Network overheads and protocol efficiency

- Errors, collisions, and retransmissions

- Congestion and contention

- End-to-end distance

Higher throughput arises from efficient protocols, adequate bandwidth, and low-latency connections.
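Packet loss and distance (through round-trip time) interact strongly. One widely cited approximation for a single TCP Reno-style flow in steady state is the Mathis et al. (1997) model: throughput ≈ (MSS / RTT) × (C / √p), with C ≈ 1.22 and p the packet loss rate. The sketch below applies it. Treat the results as order-of-magnitude estimates, since modern congestion control algorithms such as CUBIC and BBR behave differently.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state throughput of one Reno-style TCP flow:
    (MSS / RTT) * (C / sqrt(p)), with C = sqrt(3/2)."""
    if loss_rate <= 0:
        raise ValueError("the model requires a non-zero loss rate")
    c = math.sqrt(1.5)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    for rtt_ms in (20, 100):
        est = mathis_throughput_bps(1460, rtt_ms / 1000, loss_rate=1e-4)
        print(f"RTT {rtt_ms} ms, 0.01% loss: ~{est / 1e6:.1f} Mbps per flow")
```

With a 1460-byte MSS and 0.01% loss, a 20 ms path supports about 71.5 Mbps per flow, while a 100 ms path supports only a fifth of that, because the estimate scales with 1/RTT.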

Elements Impacting Bandwidth

Bandwidth capacity depends on:

- Transmission medium, spectrum, or channel

- Network hardware interfaces and infrastructure

- The frequency range for wireless networks

- Distance covered and interference

- Overhead from encoding, headers, etc.

Upgrading cables, WiFi standards, cellular generations, etc. boosts bandwidth.

Optimizing Latency

Methods to reduce latency include:

- Caching and content delivery networks (CDNs)

- Data compression and reduced payload size

- Fewer network hops through optimal routing

- Reducing overhead through efficient protocols

- Parallel or multiplexed connections so requests do not wait on one another

- Load balancing across multiple servers
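Compression reduces transmission delay (the time to push bytes onto the wire), although it does nothing for propagation delay and costs CPU time on both ends. Here is a small sketch using Python's standard `zlib` module, with a deliberately repetitive, hypothetical JSON payload and an assumed 10 Mbps link.

```python
import zlib


def transmission_time_s(num_bytes: int, bandwidth_bps: float) -> float:
    """Time to push num_bytes onto a link of the given bandwidth."""
    return num_bytes * 8 / bandwidth_bps


# Hypothetical, highly repetitive payload (real data usually compresses less well).
payload = b'{"sensor": "temp-01", "unit": "C", "value": 21.5}\n' * 2000
compressed = zlib.compress(payload, level=6)
link_bps = 10e6  # assumed 10 Mbps link

print(f"original:   {len(payload):>7} bytes, "
      f"{transmission_time_s(len(payload), link_bps) * 1000:.1f} ms on the wire")
print(f"compressed: {len(compressed):>7} bytes, "
      f"{transmission_time_s(len(compressed), link_bps) * 1000:.1f} ms on the wire")

# Decompression must restore the exact original bytes.
assert zlib.decompress(compressed) == payload
```

Repetitive text like this compresses extremely well. Already-compressed media such as JPEG images or video gains little.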

Improving Throughput

Strategies for better throughput:

- Increasing bandwidth availability where possible

- Scaling compute and network resources

- Leveraging multicore processors

- Traffic shaping and QoS prioritization

- Choosing efficient network protocols

- Minimizing retransmissions and errors

- Load balancing and horizontal scaling
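Traffic shaping and policing are commonly built on a token bucket: tokens accumulate at the configured rate up to a burst size, and a packet may be sent only when enough tokens are available. The sketch below is a simplified, hypothetical implementation that performs only the conformance check. A real shaper queues non-conforming packets until tokens accrue, whereas a policer drops or re-marks them.

```python
class TokenBucket:
    """Token bucket: tokens (in bits) refill at rate_bps up to burst_bits;
    a packet conforms only if enough tokens are available."""

    def __init__(self, rate_bps: float, burst_bits: float) -> None:
        self.rate_bps = rate_bps
        self.burst_bits = burst_bits
        self.tokens = burst_bits      # start with a full bucket
        self.last_time = 0.0

    def try_send(self, packet_bits: int, now: float) -> bool:
        # Refill for the time elapsed since the last check, capped at the burst size.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last_time = now
        if packet_bits <= self.tokens:
            self.tokens -= packet_bits
            return True
        return False   # non-conforming: a shaper would queue it, a policer drop it


if __name__ == "__main__":
    bucket = TokenBucket(rate_bps=1e6, burst_bits=24_000)
    packet_bits = 1500 * 8
    # Offer one 1500-byte packet per millisecond (12 Mbps) for one second.
    sent = sum(bucket.try_send(packet_bits, now=i / 1000) for i in range(1000))
    print(f"{sent} of 1000 packets conformed to the 1 Mbps profile")
```

Over one second the offered 12 Mbps is held to roughly the configured 1 Mbps plus the initial burst, leaving the remaining capacity for other traffic classes.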

Maximizing Bandwidth

To maximize bandwidth usage:

- Upgrade to the latest generation network hardware.

- Use high-capacity fiber/copper cables and advanced WiFi/cellular standards

- Limit distance and interference in wireless networks

- Employ quality of service (QoS) and traffic shaping

- Enable compression and caching

- Configure ports and channels optimally

- Monitor utilization to identify bottlenecks
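Utilization is usually derived from interface byte counters, such as the SNMP ifInOctets/ifOutOctets objects, sampled at a fixed interval. A minimal sketch, assuming two counter readings and a known link speed; it handles a single counter wrap. The function name is illustrative.

```python
COUNTER_32_MODULUS = 2**32  # classic ifInOctets/ifOutOctets are 32-bit counters


def link_utilization(prev_octets: int, curr_octets: int, interval_s: float,
                     link_bps: float, counter_modulus: int = COUNTER_32_MODULUS) -> float:
    """Fraction of link capacity used between two byte-counter samples."""
    # The modulo corrects for one wrap of the counter between samples.
    delta_octets = (curr_octets - prev_octets) % counter_modulus
    return (delta_octets * 8) / (interval_s * link_bps)


if __name__ == "__main__":
    # 750 MB transferred in 60 s on a 1 Gbps link, with and without a wrap.
    print(link_utilization(1_000_000_000, 1_750_000_000, 60, 1e9))
    print(link_utilization(2**32 - 100_000_000, 650_000_000, 60, 1e9))
```

A 32-bit octet counter wraps in about 34 seconds at a sustained 1 Gbps, so on fast links poll the 64-bit ifHCInOctets/ifHCOutOctets counters (`counter_modulus=2**64`) or sample more often.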

Network Protocols and Technologies

Protocols and technologies that influence latency, throughput, and bandwidth include:

- TCP tuning for throughput via window scaling, selective acknowledgments

- QUIC and UDP for low-latency applications

- MPLS for low latency and reliability

- SD-WAN for dynamic routing optimization

- DNS caching to reduce latency

- QoS and traffic shaping for bandwidth optimization

- LTE/5G for high-bandwidth wireless

- Fiber optics for high throughput and low latency
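DNS caching saves a lookup round trip on repeat requests. The sketch below wraps the operating system resolver (through `socket.getaddrinfo`) in a small in-memory cache. The class name and the fixed 60-second TTL are assumptions for illustration; real DNS caches honor each record's own TTL.

```python
import socket
import time
from typing import Callable

Resolver = Callable[[str], list[str]]


def system_resolver(hostname: str) -> list[str]:
    """Resolve via the OS resolver (may query a DNS server over the network)."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


class CachingResolver:
    """Tiny TTL cache in front of a resolver function (hypothetical helper)."""

    def __init__(self, resolve: Resolver, ttl_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._resolve = resolve
        self._ttl_s = ttl_s
        self._clock = clock
        self._cache: dict[str, tuple[float, list[str]]] = {}

    def lookup(self, hostname: str) -> list[str]:
        now = self._clock()
        entry = self._cache.get(hostname)
        if entry is not None and entry[0] > now:
            return entry[1]                    # hit: no lookup round trip
        addresses = self._resolve(hostname)    # miss: pay the lookup latency
        self._cache[hostname] = (now + self._ttl_s, addresses)
        return addresses
```

For example, `CachingResolver(system_resolver).lookup("localhost")` pays the resolver cost once; later calls within the TTL are answered from memory.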

Design Trade-Offs

Optimizing network performance involves trade-offs:

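- Reducing latency can lower throughput in some cases; for example, sending data in smaller batches or disabling Nagle's algorithm cuts delay but adds per-packet overhead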

- Increasing throughput may require greater bandwidth

- Higher bandwidth may necessitate network upgrades impacting latency

Analyzing requirements and testing options is key to balancing trade-offs.

Addressing Common Misconceptions

Some common misconceptions related to these concepts:

- Latency and throughput aren’t interchangeable. Throughput depends on latency but also other factors.

- High bandwidth doesn’t automatically increase throughput if another factor, such as latency, packet loss, or end-host limits like TCP window size, disk, or CPU, is the bottleneck.

- Boosting one metric doesn’t necessarily improve others. A comprehensive approach is required.

Monitoring, Measurement Tools, and Best Practices

Continuously monitoring these metrics is crucial:

- Use network monitoring tools like SolarWinds, PRTG, and Cisco Prime for visibility into latency, throughput, and bandwidth.

- Conduct active monitoring with tools like Ping, iPerf, traceroute, and Pathping to measure latency and throughput.

- Capture traffic with packet analyzers like Wireshark and tcpdump for diagnostics.

- Load test networks using tools like Loader.io, ApacheBench, and nGrinder to benchmark metrics under demand.

- Compare measurements before and after infrastructure changes to quantify the impact.

- Configure alerts when latency rises above, or throughput and available bandwidth fall below, defined thresholds.
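When ICMP ping is blocked, timing a TCP handshake is a common way to approximate round-trip latency to a service. Here is a hedged Python sketch using only the standard `socket` and `time` modules. The function name is illustrative, and each sample includes a little handshake processing on the remote host.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, samples: int = 5,
                       timeout_s: float = 2.0) -> list[float]:
    """Time repeated TCP handshakes to host:port, in milliseconds.

    connect() returns once the SYN / SYN-ACK exchange completes, so each
    sample is roughly one network round trip plus handshake processing.
    """
    # Resolve once, outside the timed loop, so DNS lookup time is excluded.
    family, sock_type, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    results = []
    for _ in range(samples):
        with socket.socket(family, sock_type, proto) as sock:
            sock.settimeout(timeout_s)
            start = time.perf_counter()
            sock.connect(sockaddr)
            results.append((time.perf_counter() - start) * 1000)
    return results
```

For example, `statistics.median(tcp_connect_rtt_ms("example.com", 443))` gives a median handshake time to a web server. Dedicated tools such as ping and iPerf remain better suited to sustained latency and throughput testing.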

Key best practices include:

- Establish internal SLAs and objectives for latency, throughput, and bandwidth.

- Balance latency, throughput, and bandwidth according to application requirements – don’t over-optimize one at the expense of the others.

- Implement QoS and traffic shaping to appropriately balance different traffic types.

- Evaluate tradeoffs when tuning network performance – changing one metric may impact another.

- Leverage new technologies like 5G, WiFi 6, and DOCSIS 3.1 to enhance bandwidth.

- Choose protocols like QUIC and HTTP/3 for low latency requirements.

- Scale network capacity incrementally via additional interfaces, links, and hardware.

- Utilize CDNs and caching proxies to reduce latency for content delivery.

- Continuously measure user experience to detect issues proactively.

Real-World Examples

Let’s examine how latency, throughput, and bandwidth affect two common use cases: media streaming and web browsing.

Media Streaming

When streaming video or audio over the internet from services like YouTube, Netflix, Spotify, etc., all three metrics play important roles:

- Latency affects startup time and, for live streams, how far playback lags behind real time. Players buffer content ahead of playback to absorb delays and throughput fluctuations.

- Throughput determines the media quality. Higher throughput enables streaming at higher resolutions and bitrates.

- Bandwidth determines maximum resolution/bitrate options. More bandwidth enables higher-quality streaming.

To optimize streaming, networks need sufficient bandwidth to deliver the content, high enough throughput to sustain the stream, and low enough latency to minimize buffering. Slow internet connections with insufficient bandwidth or high latency will result in constant buffering and lower video quality.
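Streaming players commonly cope with varying throughput through adaptive bitrate (ABR) selection. The simplest throughput-based rule picks the highest rendition that fits within a safety margin of recently measured throughput. In the sketch below, the bitrate ladder and the 0.8 safety factor are hypothetical values; production players also take buffer level into account.

```python
def pick_bitrate_kbps(ladder_kbps: list[int], measured_kbps: float,
                      safety: float = 0.8) -> int:
    """Throughput-based ABR: highest rendition within safety * measured throughput,
    falling back to the lowest rendition if none fits."""
    budget = measured_kbps * safety
    fitting = [b for b in sorted(ladder_kbps) if b <= budget]
    return fitting[-1] if fitting else min(ladder_kbps)


if __name__ == "__main__":
    ladder = [235, 750, 1750, 3000, 5800]   # hypothetical renditions, kbps
    for measured in (200, 4000, 10_000):
        print(measured, "kbps measured ->", pick_bitrate_kbps(ladder, measured), "kbps")
```

The safety margin keeps the player from choosing a bitrate that only just fits, which would leave no headroom when throughput dips.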

Web Browsing

Web browsing relies on many small HTTP requests that load elements like text, images, scripts, and stylesheets. Here’s how the metrics impact performance:

- Latency determines page load time. Higher latency means longer wait times for pages to become interactive.

- Throughput determines the transfer speed of page elements; with higher throughput, pages load faster.

- Bandwidth allows more simultaneous connections. More bandwidth enables faster parallel downloads.

To optimize web browsing, networks need enough bandwidth to enable many simultaneous connections, high throughput to transfer page elements fast, and low latency for interactivity. Slow networks with high latency result in sluggish page loads.
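A back-of-the-envelope model shows why latency usually dominates page loads. The sketch below assumes a few round trips of connection setup (DNS, TCP, TLS), objects fetched in waves over six parallel connections with one round trip per wave, and ignores TCP slow start and HTTP/2 multiplexing. All parameter values are illustrative.

```python
import math


def page_load_time_s(rtt_s: float, bandwidth_bps: float, total_bytes: int,
                     num_objects: int, parallel: int = 6,
                     setup_rtts: int = 3) -> float:
    """Rough model: setup round trips, then one round trip per wave of
    `parallel` requests, plus the time to transfer all bytes at full bandwidth."""
    waves = math.ceil(num_objects / parallel)
    return (setup_rtts + waves) * rtt_s + total_bytes * 8 / bandwidth_bps


if __name__ == "__main__":
    for rtt_ms, mbps in [(100, 50), (100, 500), (50, 50)]:
        t = page_load_time_s(rtt_ms / 1000, mbps * 1e6,
                             total_bytes=2_000_000, num_objects=60)
        print(f"RTT {rtt_ms} ms, {mbps} Mbps: ~{t:.2f} s")
```

For a 2 MB page with 60 objects at 100 ms RTT, raising bandwidth from 50 Mbps to 500 Mbps cuts the estimate only from about 1.62 s to 1.33 s, while halving RTT to 50 ms at 50 Mbps cuts it to about 0.97 s.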

As you can see, understanding latency vs throughput vs bandwidth helps explain common internet performance issues. Tweaking these metrics also helps optimize applications for speed.

Online Gaming

Low latency enables real-time interactivity. Consistent throughput keeps game state updates flowing without stalls. Sufficient bandwidth supports game downloads, cloud game streaming, and many concurrent users.

Video Streaming

High bandwidth and throughput enable HD video delivery. Sustained throughput prevents buffering and stuttering, and low latency keeps live streams close to real time.

Cloud Computing

Low latency facilitates access to cloud resources. High throughput enables fast data transfers. High bandwidth allows scaling cloud usage.

High-Frequency Trading

Ultra-low latency enables rapid automated trades. High throughput facilitates high volumes of transactions.

Internet of Things (IoT)

Low latency allows real-time control and telemetry. Throughput enables large data transfers from sensors and devices. High bandwidth accommodates massive scale.

Conclusion

Latency, throughput, and bandwidth represent the foundational metrics of network performance. While overlapping, these concepts have distinct definitions and purposes. Latency measures delay, throughput tracks actual data transfers, and bandwidth determines maximum capacity.

Optimizing these elements in unison is essential for responsive networks and applications. By leveraging protocols, technologies, monitoring tools, and capacity planning focused on latency, throughput, and bandwidth, modern networks can offer seamless and speedy user experiences. A nuanced understanding of their differences and relationship enables better design decisions and performance troubleshooting.

Frequently Asked Questions (FAQs)

What is more important, latency or throughput?

Latency and throughput are both important depending on the use case. Latency is critical for real-time applications, while throughput is key for large data transfers.

What is latency vs bandwidth vs throughput?

Latency measures delay, bandwidth measures capacity, and throughput measures transfer rate. Latency is affected by distance and hops, bandwidth by network capacity, and throughput by effective utilization of bandwidth.

Is latency the inverse of throughput?

Latency and throughput are related but not exact inverses. Higher latency generally leads to lower throughput but their relationship also depends on other factors like bandwidth.

How much does latency affect throughput?

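Latency significantly affects throughput. Higher latency reduces effective throughput because the sender spends more time waiting for acknowledgments before it can send more data. Adding bandwidth alone does not compensate; to fill a high-bandwidth, high-latency path, the sender must keep more data in flight, for example through larger TCP windows or parallel connections.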
