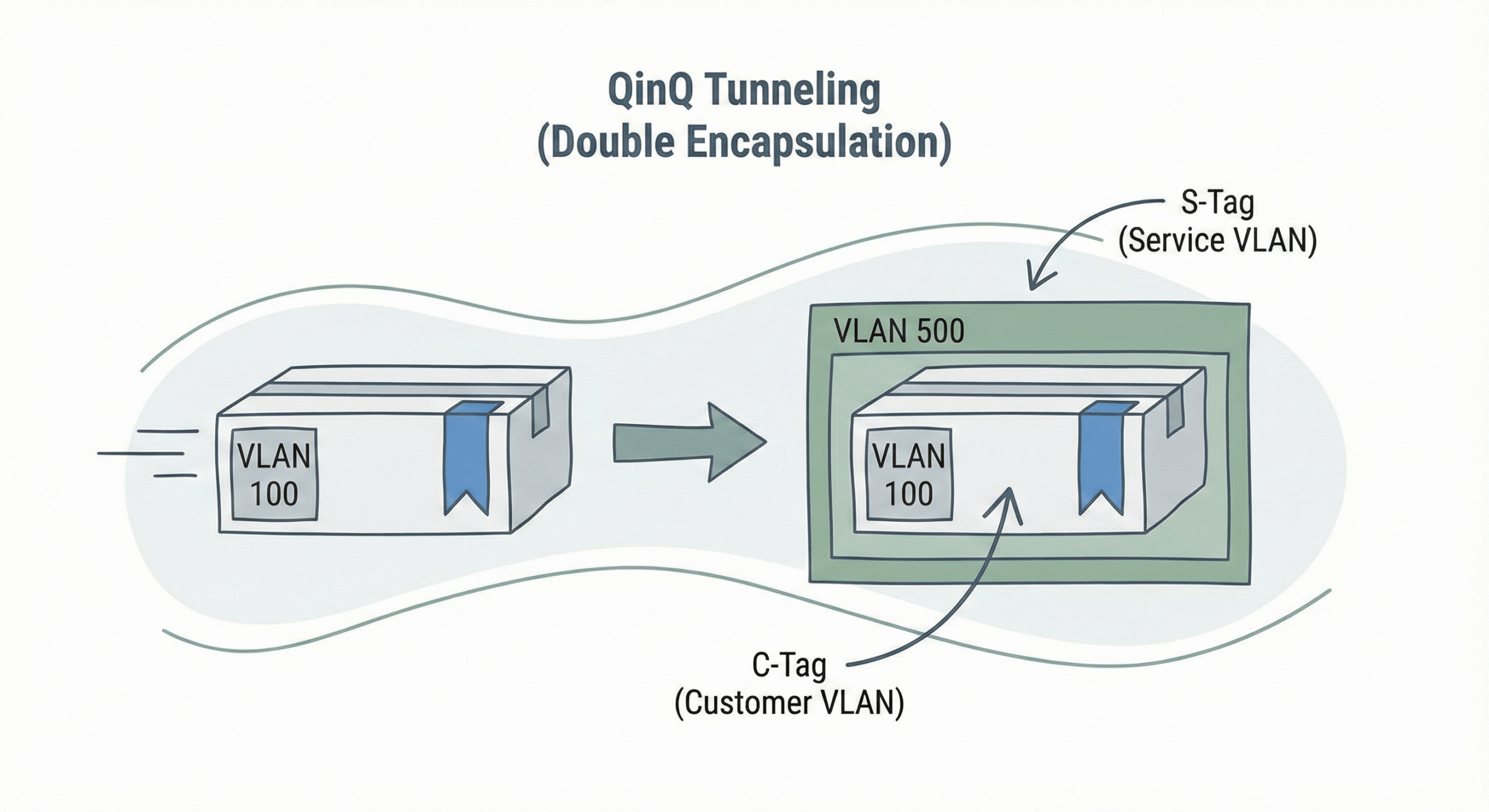

When I first wrote about QinQ tunneling (or IEEE 802.1ad, formally known as Provider Bridging) back in 2011, it was an absolutely foundational technology for service providers. Fast forward to today, and while the network landscape has evolved dramatically with SDN, EVPN/VXLAN, Segment Routing, and pervasive network automation, QinQ remains a vital, widely deployed mechanism. It continues to be the method of choice for many service providers to preserve customer VLAN IDs and to segregate traffic effectively, particularly in carrier Ethernet deployments and multi-tenant data center interconnect scenarios. As a quick orientation: QinQ adds an outer service tag (S‑tag) around a customer’s 802.1Q tag (C‑tag), requires careful MTU planning for double-tagged frames, and typically filters customer L2 control protocols unless explicitly tunneled.

At its core, QinQ allows a service provider to encapsulate a customer’s 802.1Q tagged Ethernet frame with an additional 802.1Q tag, often called the S-tag (Service Provider Tag) or Metro Ethernet Tag. The original customer tag is then referred to as the C-tag (Customer Tag). This double-tagging enables providers to use a single VLAN in their backbone network to support numerous customers, each potentially using their own range of VLANs, all while maintaining their distinct traffic separation. For me, it always brings back memories of working with MPLS labels – the concept of imposition and disposition helps explain the tagging and stripping process quite nicely.
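To make the imposition and disposition analogy concrete, here is a minimal Python sketch of pushing and popping an S-tag on a raw frame. The helper names (`build_tag`, `push_s_tag`, `pop_s_tag`) and the sample MAC addresses are illustrative assumptions, not anything a switch exposes; only the TPID values and the tag layout come from the standards.

```python
import struct

TPID_S_TAG = 0x88A8  # 802.1ad service tag
TPID_C_TAG = 0x8100  # 802.1Q customer tag


def build_tag(tpid: int, vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Pack a 4-byte VLAN tag: 16-bit TPID followed by 16-bit TCI (PCP/DEI/VID)."""
    if not 1 <= vid <= 4094:
        raise ValueError(f"VLAN ID out of range: {vid}")
    tci = (pcp & 0x7) << 13 | (dei & 0x1) << 12 | vid
    return struct.pack("!HH", tpid, tci)


def push_s_tag(frame: bytes, s_vid: int) -> bytes:
    """Imposition: insert the S-tag right after the 12 bytes of dst/src MAC."""
    return frame[:12] + build_tag(TPID_S_TAG, s_vid) + frame[12:]


def pop_s_tag(frame: bytes) -> tuple[int, bytes]:
    """Disposition: strip the outer S-tag and return (s_vid, inner frame)."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_S_TAG:
        raise ValueError(f"outer tag is not an S-tag (TPID 0x{tpid:04X})")
    return tci & 0x0FFF, frame[:12] + frame[16:]


# Customer frame: dst MAC, src MAC, C-tag (VLAN 10), EtherType IPv4, dummy payload
customer_frame = (
    bytes.fromhex("ffffffffffff")
    + bytes.fromhex("020000000001")
    + build_tag(TPID_C_TAG, 10)
    + struct.pack("!H", 0x0800)
    + b"\x00" * 46
)
tunneled = push_s_tag(customer_frame, s_vid=15)
s_vid, restored = pop_s_tag(tunneled)
print(len(customer_frame), len(tunneled), s_vid, restored == customer_frame)
# prints: 64 68 15 True
```

The customer's C-tag is never touched: the provider only adds and removes its own 4 bytes, which is why the customer VLAN ID survives the trip.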

Let’s revisit some fundamental aspects of configuring and understanding QinQ, alongside crucial updates reflecting modern networking practices, especially regarding automation, multi-vendor environments, and observability.

Key Principles of QinQ Tunneling

When dealing with QinQ, several foundational concepts remain critical:

- Tunnel Port Definition: A port facing the customer device must be defined as a “tunnel port” or equivalent. This port is assigned a specific provider VLAN (S-tag) that identifies the customer’s service within the provider’s network.

- Traffic Segregation: Each customer is typically assigned to a unique tunnel port (or a logical equivalent) that maps to a distinct provider VLAN. This ensures their traffic remains completely separate and isolated within the provider’s domain.

- Encapsulation and Decapsulation: When a tunnel port receives customer traffic (which can be untagged or already 802.1Q tagged), it adds a 4-byte S-tag. In 802.1ad (QinQ), the outer Service tag (S-tag) uses TPID 0x88A8, while the inner Customer tag (C-tag) uses TPID 0x8100. Some vendors can be configured to accept alternate TPIDs for interoperability. The frame then traverses the provider network. At the egress tunnel port, this S-tag is stripped off before the traffic is transmitted to the remote customer device.

- MTU Adjustment: This is a classic “gotcha”! With a 1500-byte payload, an untagged Ethernet frame is 1518 bytes on the wire; a single 802.1Q tag raises that to 1522 bytes, and double tagging (QinQ) to 1526 bytes. To preserve a 1500-byte IP payload, every device along the path must allow 4 extra bytes per tag. On Cisco Catalyst 9K (IOS XE), for example, the usual setting is a system MTU of 1504, which covers the added S-tag on top of the single tag the default already accommodates. Alternatively, standardize on a jumbo MTU (e.g., 9214 or 9216) across the path to accommodate overlays without fragmentation.
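The frame-size arithmetic behind the MTU point can be checked in a few lines of Python. This is a rough sketch: `frame_size` and `mtu_with_extra_tags` are hypothetical helper names, and the second function assumes a platform whose MTU setting already tolerates one 802.1Q tag, so only additional tags need headroom. Verify that assumption for your hardware.

```python
ETH_HEADER = 14  # dst MAC + src MAC + EtherType
FCS = 4          # frame check sequence
VLAN_TAG = 4     # TPID + TCI, per tag


def frame_size(payload: int, tags: int) -> int:
    """On-the-wire frame size for a given payload and number of VLAN tags."""
    return ETH_HEADER + tags * VLAN_TAG + payload + FCS


def mtu_with_extra_tags(payload: int, extra_tags: int) -> int:
    """MTU value to configure when each extra tag needs 4 more bytes.

    Assumes the platform's MTU knob already allows for a single 802.1Q tag.
    """
    return payload + extra_tags * VLAN_TAG


for tags in (0, 1, 2):
    print(tags, frame_size(1500, tags))
# prints:
# 0 1518
# 1 1522
# 2 1526

print(mtu_with_extra_tags(1500, extra_tags=1))  # prints: 1504
```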

Layer 2 Protocol Tunneling (L2PT)

In a QinQ environment, Layer 2 Protocol Data Units (PDUs) like CDP, STP, and LLDP from the customer network are not automatically propagated across the provider’s tunnel. This is by design, to prevent customer L2 protocols from interfering with the provider’s network, which could cause loops or unintended topology changes. However, customers often need these protocols to function end-to-end for their own network management, discovery, or redundancy mechanisms.

To enable this, L2PT must be explicitly configured. This encapsulates the customer’s L2 PDUs within the provider’s network, allowing them to traverse the tunnel transparently. The default tunneled PDU destination MAC is 01:00:0C:CD:CD:D0 (configurable on some vendors). This ensures the PDU is treated as data within the provider network and not processed by the provider’s own L2 control plane.
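The MAC rewrite at the heart of L2PT can be sketched in Python as follows. The tunnel MAC is the one quoted above and the protocol MACs are the well-known multicast destinations for STP, CDP, and LLDP. Everything else is an assumption for illustration: the function names are hypothetical, the frames are treated as untagged PDUs, and identifying the protocol at egress from the EtherType or LLC/SNAP header is just one plausible way to do it, since real platforms handle this internally.

```python
from typing import Optional

L2PT_TUNNEL_MAC = bytes.fromhex("01000ccdcdd0")

# Well-known destination MACs of the customer protocols
PROTOCOL_MACS = {
    "stp": bytes.fromhex("0180c2000000"),
    "cdp": bytes.fromhex("01000ccccccc"),
    "lldp": bytes.fromhex("0180c200000e"),
}


def classify(frame: bytes) -> Optional[str]:
    """Identify the protocol from the bytes that follow the two MAC addresses."""
    if frame[12:14] == b"\x88\xcc":          # LLDP EtherType
        return "lldp"
    llc = frame[14:22]                        # 802.3 length field precedes LLC
    if llc[:3] == b"\x42\x42\x03":           # STP LLC header
        return "stp"
    if llc == b"\xaa\xaa\x03\x00\x00\x0c\x20\x00":  # CDP SNAP header
        return "cdp"
    return None


def ingress_rewrite(frame: bytes, enabled: set[str]) -> Optional[bytes]:
    """Tunnel enabled control PDUs, filter the rest, pass data frames untouched."""
    if frame[:6] not in PROTOCOL_MACS.values():
        return frame                          # ordinary data frame
    if classify(frame) not in enabled:
        return None                           # control PDU without L2PT: filtered
    return L2PT_TUNNEL_MAC + frame[6:]


def egress_restore(frame: bytes) -> bytes:
    """Put the original destination MAC back before handing off to the customer."""
    proto = classify(frame) if frame[:6] == L2PT_TUNNEL_MAC else None
    return PROTOCOL_MACS[proto] + frame[6:] if proto else frame


src = bytes.fromhex("020000000001")
lldp_pdu = PROTOCOL_MACS["lldp"] + src + b"\x88\xcc" + b"\x00" * 46
stp_bpdu = PROTOCOL_MACS["stp"] + src + b"\x00\x26\x42\x42\x03" + b"\x00" * 35

tunneled = ingress_rewrite(lldp_pdu, enabled={"lldp"})
print(tunneled[:6].hex(":"))                         # prints: 01:00:0c:cd:cd:d0
print(egress_restore(tunneled) == lldp_pdu)          # prints: True
print(ingress_rewrite(stp_bpdu, enabled={"lldp"}))   # prints: None
```

Inside the provider network the rewritten PDU is just another multicast data frame, so the provider's own STP or LLDP processes never see it.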

While still relevant, it’s worth noting that VTP is less common and generally discouraged in modern service provider contexts due to its potential for network-wide VLAN changes and instability. Best practice is often to block VTP unless absolutely necessary and thoroughly controlled. Modern VLAN management typically favors more controlled, static, or automation-driven provisioning.

Traditional QinQ Scenario

The core scenario for QinQ hasn’t changed much, but our approach to deploying and managing it certainly has. Let’s look at a classic example:

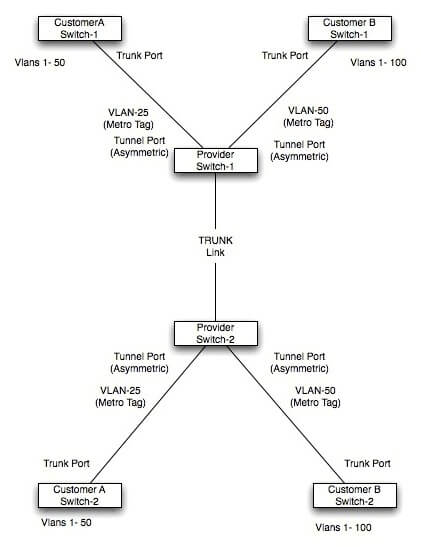

In this diagram, Customer A sends VLANs 1-50 over their metro Ethernet link. Similarly, Customer B sends VLANs 1-100. The provider’s network effectively tunnels these customer VLANs while keeping Customer A’s and Customer B’s traffic completely isolated using different provider S-tags (e.g., VLAN 25 for Customer A, VLAN 50 for Customer B). The Metro Ethernet Tag is the provider’s S-tag.

A key observation here is the use of asymmetric ports: the customer-facing ports on the provider switch are configured as tunnel ports (which treat every incoming frame as belonging to the port’s provider VLAN, whether or not it already carries a C-tag, and add the S-tag accordingly), while the customer switches are configured with standard 802.1Q trunk ports. The provider’s internal network typically uses standard 802.1Q trunks for interconnecting provider switches, carrying the S-tagged traffic.

A critical best practice involves native VLANs on provider trunk links. These should never overlap with any customer VLANs to prevent accidental double-tagging issues or traffic leakage. A common and highly recommended solution is to explicitly tag the native VLAN on provider-internal trunks using commands like vlan dot1q tag native on Cisco platforms, or equivalent configurations on other vendors. This ensures all traffic traversing the provider’s backbone is explicitly tagged, enhancing security, preventing misconfigurations, and improving transparency.
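A check like this is easy to automate before a trunk goes live. The sketch below uses the customer ranges from the example above (VLANs 1-50 and 1-100); the function names and the dictionary layout are assumptions made for illustration.

```python
def parse_vlan_ranges(spec: str) -> set[int]:
    """Expand a string such as '1-50,100' into a set of VLAN IDs."""
    vlans: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        low, _, high = part.partition("-")
        vlans.update(range(int(low), int(high or low) + 1))
    return vlans


def native_vlan_conflicts(native_vlan: int, customers: dict[str, str]) -> list[str]:
    """Return the customers whose VLAN range includes the trunk's native VLAN."""
    return sorted(
        name for name, spec in customers.items()
        if native_vlan in parse_vlan_ranges(spec)
    )


customers = {"Customer A": "1-50", "Customer B": "1-100"}
print(native_vlan_conflicts(1, customers))    # prints: ['Customer A', 'Customer B']
print(native_vlan_conflicts(999, customers))  # prints: []
```

Note that the default native VLAN of 1 collides with both customers here, which is exactly the case that explicit native VLAN tagging is meant to neutralize.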

Essential Considerations for Provider Tunnel Ports (Updated)

When configuring QinQ tunnel ports on provider edge switches, always keep these points in mind:

- No Direct Routing: Tunnel ports are designed for Layer 2 transparency. You cannot directly route traffic on a tunnel port itself. If Switched Virtual Interfaces (SVIs) or Bridge Domain Interfaces (BDIs) are configured for the provider VLAN (S-tag), only untagged frames (or frames where the S-tag is the native VLAN) will be routed by the SVI. This reinforces the Layer 2 nature of QinQ services; routing occurs after decapsulation or at a higher layer in the provider network.

- L2 Protocol Default Behavior: When a port is configured as an IEEE 802.1Q tunnel port, certain Layer 2 protocols are often automatically filtered, rewritten, or disabled by default to maintain network isolation and prevent loops. For instance, on Cisco IOS XE devices, Spanning-Tree Protocol (STP) Bridge Protocol Data Unit (BPDU) filtering is typically enabled, and Cisco Discovery Protocol (CDP) and Link Layer Discovery Protocol (LLDP) are automatically disabled or their PDUs are encapsulated. As mentioned, if you need these customer protocols to pass, you must explicitly enable tunneling/forwarding per vendor behavior.

- QoS Limitations: Layer 3 Quality of Service (QoS) ACLs and other QoS features relying on Layer 3 information are generally not supported directly on tunnel ports, as the port operates at Layer 2 (before the S-tag is stripped). However, MAC-based QoS, CoS (Class of Service) marking based on original C-tag, or remarking of the S-tag CoS field based on customer input are often supported, allowing you to prioritize traffic based on customer MAC addresses or their ingress CoS markings.

- Link Aggregation Support: On Cisco IOS XE (Cat9K), L2PT natively supports CDP, STP, VTP and LLDP; PAgP/LACP/UDLD tunneling is intended for emulated point‑to‑point topologies and must not be broadly flooded. Validate platform behavior before enabling control protocol tunneling on aggregation links.

- Security Policy Enforcement: While QinQ provides isolation, granular security policies (e.g., port security, ingress/egress ACLs based on MAC addresses, DHCP snooping) are still critical at the customer-facing tunnel port. These features help prevent rogue devices, MAC flooding, and other Layer 2 attacks from impacting other customers or the provider network.
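As an illustration of the CoS point in the list above, the following Python sketch copies the 3-bit priority (PCP) from a customer's C-tag into the S-tag that the provider pushes. It is a simplified model with hypothetical function names; on real hardware this is done by the platform's QoS policy, not by code like this.

```python
import struct

TPID_S_TAG = 0x88A8
TPID_C_TAG = 0x8100


def c_tag_pcp(frame: bytes) -> int:
    """Return the 3-bit PCP of the customer tag, or 0 if the frame is untagged."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    return tci >> 13 if tpid == TPID_C_TAG else 0


def s_tag_with_copied_cos(frame: bytes, s_vid: int) -> bytes:
    """Build the S-tag for this frame, carrying over the customer's PCP bits."""
    return struct.pack("!HH", TPID_S_TAG, c_tag_pcp(frame) << 13 | s_vid)


# Customer frame tagged with VLAN 10 and priority 5
frame = (
    bytes.fromhex("ffffffffffff")
    + bytes.fromhex("020000000001")
    + struct.pack("!HH", TPID_C_TAG, 5 << 13 | 10)
    + struct.pack("!H", 0x0800)
    + b"\x00" * 46
)
print(s_tag_with_copied_cos(frame, s_vid=15).hex())  # prints: 88a8a00f
```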

Q-in-Q Tunneling Configuration Examples (Multi-Vendor & Automated)

Let’s look at a simple scenario and see how we can configure it, first with traditional CLI, and then with modern automation tools.

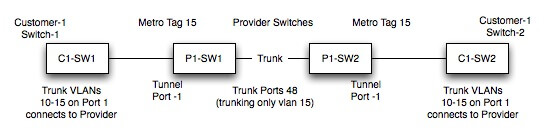

We’ll configure C1-SW1 and C1-SW2 as customer switches, and P1-SW1 and P1-SW2 as provider edge switches.

Cisco IOS XE Configuration

This configuration reflects modern Cisco IOS XE syntax. Pay attention to the system mtu command: on many platforms the new value takes effect only after a reload, so schedule it accordingly. On platforms where 802.1Q is the only encapsulation (e.g., many Catalyst 9K models), the command switchport trunk encapsulation dot1q is not supported and should be omitted.

| Description | C1-SW1 | C1-SW2 |
| --- | --- | --- |
| Configuration of Customer Ports Connecting to Provider Edge Switches | | |
| Customer VLANs | VLANs 10,11,12,13,14,15,16 | VLANs 10,11,12,13,14,15,16 |
| Customer SVIs (Example for reachability) | | |

| Description | P1-SW1 (Provider Edge) | P1-SW2 (Provider Edge) |
| --- | --- | --- |
| Trunk Ports between Provider Switches (e.g., to Provider Core) | | |
| Provider S-tag on Trunk | VLAN 15 (Provider S-tag) | VLAN 15 (Provider S-tag) |
| Q-in-Q and L2PT configuration of Provider Edge switches | | |
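For orientation, here is a small Python sketch that renders the kind of IOS XE tunnel-port stanza a provider edge switch needs. The command set mirrors the lines used in the automation examples later in this post; the function name and its parameters are hypothetical, and the output should be treated as a starting point to validate on your platform rather than a definitive configuration.

```python
def render_tunnel_port(
    interface: str,
    description: str,
    provider_vlan: int,
    tunneled_protocols: tuple[str, ...] = ("cdp", "stp", "lldp"),
) -> list[str]:
    """Render IOS XE-style config lines for a customer-facing QinQ tunnel port."""
    if not 1 <= provider_vlan <= 4094:
        raise ValueError(f"invalid provider VLAN: {provider_vlan}")
    lines = [
        f"interface {interface}",
        f" description {description}",
        f" switchport access vlan {provider_vlan}",  # the S-tag for this customer
        " switchport mode dot1q-tunnel",
    ]
    # One l2protocol-tunnel line per customer protocol carried end-to-end
    lines += [f" l2protocol-tunnel {proto}" for proto in tunneled_protocols]
    lines.append(" no cdp enable")
    return lines


print("\n".join(render_tunnel_port("GigabitEthernet0/1", "To-Cust1", 15)))
```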

Multi-Vendor QinQ: Juniper Junos OS & Arista EOS

As network engineers, we rarely work in a purely single-vendor environment. Understanding how different vendors implement the same concept is crucial for building robust, multi-vendor solutions. For QinQ, the principles are the same, but the syntax differs.

Juniper Junos OS (Provider Edge)

Juniper typically uses flexible-vlan-tagging and VLAN maps on the interface, with QinQ applied via input/output VLAN maps and the interface tied to a bridge domain or EVPN instance. L2 protocol tunneling is enabled using protocols layer2-control mac-rewrite and per‑protocol statements or VLAN-level L2PT controls. The default tunnel destination MAC is 01:00:0C:CD:CD:D0.

```
# On P1-SW1 (Juniper MX/QFX - Junos OS 23.x)
set interfaces ge-0/0/1 description "To-Cust1"
set interfaces ge-0/0/1 flexible-vlan-tagging
set interfaces ge-0/0/1 encapsulation vlan-bridge
set interfaces ge-0/0/1 unit 0 encapsulation vlan-bridge
set interfaces ge-0/0/1 unit 0 input-vlan-map push
set interfaces ge-0/0/1 unit 0 vlan-id 15
set interfaces ge-0/0/1 mtu 1504

# L2 protocol tunneling (examples): enable as required
set protocols layer2-control mac-rewrite interface ge-0/0/1.0 protocol stp
set protocols layer2-control mac-rewrite interface ge-0/0/1.0 protocol lldp

# Bridge domain tying it together
set bridge-domains BD_Cust1 description "Customer 1 QinQ Service"
set bridge-domains BD_Cust1 domain-type bridge
set bridge-domains BD_Cust1 vlan-id 15
set bridge-domains BD_Cust1 interface ge-0/0/1.0

# Verification (examples):
# show ethernet-switching layer2-protocol-tunneling
# show bridge mac-table interface ge-0/0/1.0
```

Juniper handles L2PT by rewriting destination MACs for specified protocols at the interface or VLAN level; combine this with QinQ tagging to carry customer control PDUs transparently.

Arista EOS (Provider Edge)

Arista’s EOS is quite close to Cisco’s IOS XE in how it handles dot1q tunneling, but L2 control protocol handling is configured via L2 Protocol Forwarding (L2PF), not Cisco-style L2PT commands. Use L2PF to pass or drop LLDP/STP/LACP as required.

```
# On P1-SW1 (Arista EOS 4.32.x)
interface Ethernet1
   description To-Cust1
   switchport mode dot1q-tunnel
   switchport access vlan 15
   mtu 1504

# Configure L2 Protocol Forwarding policies per protocol as needed (LLDP/STP/LACP)

# Example verification:
# show dot1q-tunnel
# show l2-protocol forwarding interface Ethernet1 detail

# Consider system-wide jumbo MTU if overlays are used:
# system jumbo mtu 9214
```

Automating QinQ Deployment with Python and Ansible

This is where modern network engineering truly shines! Manually configuring QinQ across multiple provider edge devices is time-consuming, prone to human error, and difficult to scale. Infrastructure as Code (IaC), GitOps, and network automation frameworks like Ansible, Nornir, and Scrapli are essential tools today for deploying and managing complex network services like QinQ.

I’ve broken enough configurations to know that automation isn’t just a luxury; it’s a necessity for reliability, speed, and maintaining consistent configurations across a sprawling network.

Python with Nornir & Netmiko (Python 3.10+)

Nornir (3.5+) is a Python automation framework that works well with network drivers like Netmiko (4.5+) or Scrapli to execute commands across multiple devices. Here’s a simplified Python script example for configuring a QinQ tunnel port on a Cisco IOS XE device:

```python
# qinq_deploy.py
import logging

from nornir import InitNornir
from nornir_netmiko.tasks import netmiko_send_config
from nornir_utils.plugins.functions import print_result

# Suppress Netmiko/Paramiko log spam
logging.getLogger("paramiko").setLevel(logging.WARNING)
logging.getLogger("nornir_netmiko").setLevel(logging.WARNING)


def configure_qinq(task):
    """Configures QinQ on a Cisco IOS XE device."""
    if "ios" in task.host.platform:
        # Per-host values (interface, description, provider_vlan) come from hosts.yaml
        config_commands = [
            f"interface {task.host['interface']}",
            f"description {task.host['description']}",
            f"switchport access vlan {task.host['provider_vlan']}",
            "switchport mode dot1q-tunnel",
            "l2protocol-tunnel cdp",
            "l2protocol-tunnel stp",
            "l2protocol-tunnel lldp",
            "no cdp enable",
            "no lldp transmit",
            "no lldp receive",
        ]
        mtu_command = "system mtu 1504"
        # Apply MTU separately; note that it requires a reload on many platforms
        task.run(
            task=netmiko_send_config,
            config_commands=[mtu_command],
            name="Ensure System MTU",
        )
        task.run(
            task=netmiko_send_config,
            config_commands=config_commands,
            name="Configure QinQ Interface",
        )
    else:
        raise RuntimeError(f"Skipping {task.host.name}: Not an IOS platform for this task.")


if __name__ == "__main__":
    nr = InitNornir(config_file="config.yaml")
    cisco_pes = nr.filter(platform="ios")
    print("--- Configuring QinQ on Cisco PEs ---")
    result = cisco_pes.run(task=configure_qinq)
    print_result(result)
```

And a sample hosts.yaml (part of Nornir’s inventory, typically alongside config.yaml):

```yaml
# hosts.yaml
# The password values below are placeholders: Nornir does not render Jinja
# expressions in its inventory, so inject the real secret at runtime.
P1-SW1:
  hostname: 192.168.1.10
  platform: ios
  username: admin
  password: "{{ vault_cisco_password }}"
  data:
    interface: GigabitEthernet0/1
    description: To-Cust1
    provider_vlan: 15

P1-SW2:
  hostname: 192.168.1.11
  platform: ios
  username: admin
  password: "{{ vault_cisco_password }}"
  data:
    interface: GigabitEthernet0/1
    description: To-Cust1
    provider_vlan: 15
```
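Because the script reads `interface`, `description`, and `provider_vlan` straight from the inventory, it is worth validating that data before touching any device. Below is a minimal sketch of such a pre-flight check; `validate_host_data` and `REQUIRED_KEYS` are hypothetical names, and the dictionary stands in for the inventory. In a real run you could feed it each host's `data` from `nr.inventory.hosts`.

```python
REQUIRED_KEYS = ("interface", "description", "provider_vlan")


def validate_host_data(name: str, data: dict) -> list[str]:
    """Return a list of problems found in one host's QinQ inventory data."""
    errors = [f"{name}: missing '{key}'" for key in REQUIRED_KEYS if key not in data]
    vlan = data.get("provider_vlan")
    if vlan is not None and not (isinstance(vlan, int) and 1 <= vlan <= 4094):
        errors.append(f"{name}: provider_vlan {vlan!r} is not a valid VLAN ID")
    return errors


# Stand-in for the inventory above, plus one deliberately broken host
inventory = {
    "P1-SW1": {"interface": "GigabitEthernet0/1", "description": "To-Cust1", "provider_vlan": 15},
    "P1-SW2": {"interface": "GigabitEthernet0/1", "description": "To-Cust1", "provider_vlan": 15},
    "P1-SW3": {"interface": "GigabitEthernet0/1", "provider_vlan": 5000},
}

problems = [err for name, data in inventory.items() for err in validate_host_data(name, data)]
for err in problems:
    print(err)
# prints:
# P1-SW3: missing 'description'
# P1-SW3: provider_vlan 5000 is not a valid VLAN ID
```

Failing fast on bad inventory data is cheaper than discovering a typo after half the provider edge has been reconfigured.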

Ansible Playbook (Ansible 13.x)

Ansible is excellent for multi-vendor orchestration, leveraging platform-specific modules (Ansible Collections). Here’s an example playbook to configure QinQ on Cisco, Juniper, and Arista devices:

```yaml
# qinq_deploy_playbook.yaml
---
- name: Configure QinQ Tunnel Ports on Provider Edge Switches
  hosts: provider_edge_switches
  gather_facts: no
  # Junos hosts typically need ansible_connection set to ansible.netcommon.netconf in inventory
  connection: network_cli
  vars:
    provider_vlan: 15
    # customer_interface must come from inventory host/group vars, because interface
    # names differ per platform (GigabitEthernet0/1, ge-0/0/1, Ethernet1)

  tasks:
    - name: Configure Cisco IOS XE QinQ tunnel port
      cisco.ios.ios_config:
        lines:
          - "description To-Cust1"
          - "switchport access vlan {{ provider_vlan }}"
          - "switchport mode dot1q-tunnel"
          - "l2protocol-tunnel cdp"
          - "l2protocol-tunnel stp"
          - "l2protocol-tunnel lldp"
          - "no cdp enable"
          - "no lldp transmit"
          - "no lldp receive"
        parents: "interface {{ customer_interface }}"
      when: ansible_network_os == 'ios'
      tags: cisco_qinq

    - name: Ensure Cisco system MTU is set (requires reload on some platforms)
      cisco.ios.ios_config:
        lines:
          - "system mtu 1504"
      when: ansible_network_os == 'ios'
      tags: cisco_mtu

    - name: Configure Juniper Junos QinQ tunnel port
      junipernetworks.junos.junos_config:
        lines:
          - 'set interfaces {{ customer_interface }} description "To-Cust1"'
          - "set interfaces {{ customer_interface }} flexible-vlan-tagging"
          - "set interfaces {{ customer_interface }} encapsulation vlan-bridge"
          - "set interfaces {{ customer_interface }} unit 0 encapsulation vlan-bridge"
          - "set interfaces {{ customer_interface }} unit 0 input-vlan-map push"
          - "set interfaces {{ customer_interface }} unit 0 vlan-id {{ provider_vlan }}"
          - "set protocols layer2-control mac-rewrite interface {{ customer_interface }}.0 protocol stp"
          - "set protocols layer2-control mac-rewrite interface {{ customer_interface }}.0 protocol lldp"
      when: ansible_network_os == 'junos'
      tags: juniper_qinq

    # Configure L2 Protocol Forwarding (LLDP/STP/LACP) using EOS L2PF policies as required
    - name: Configure Arista EOS QinQ tunnel port
      arista.eos.eos_config:
        lines:
          - "description To-Cust1"
          - "switchport mode dot1q-tunnel"
          - "switchport access vlan {{ provider_vlan }}"
          - "mtu 1504"
        parents: "interface {{ customer_interface }}"
      when: ansible_network_os == 'eos'
      tags: arista_qinq

    - name: Ensure Arista system jumbo MTU is set (if required globally)
      arista.eos.eos_config:
        lines:
          - "system jumbo mtu 9214"
      when: ansible_network_os == 'eos'
      tags: arista_mtu
```

This playbook assumes you have an Ansible inventory file where you’ve defined a group provider_edge_switches and set ansible_network_os for each device (e.g., ios, junos, eos). Running this playbook would apply the correct QinQ configuration based on the device’s operating system, ensuring consistent deployment across your multi-vendor environment.

Beyond QinQ: Observability, SDN, and the Future

While QinQ remains critical, its management and monitoring have advanced significantly, integrating with modern network paradigms:

- Observability: Modern networks leverage streaming telemetry (via gNMI or NETCONF/RESTCONF with OpenConfig models) to gain real-time, high-fidelity insights into QinQ interfaces, traffic counters, error rates, and overall service health. This allows for proactive monitoring, faster anomaly detection, and quicker troubleshooting compared to traditional SNMP polling. Tools like Prometheus and Grafana can consume this data to provide rich dashboards and alerts, helping to maintain strict SLAs for QinQ services.

- SDN and Intent-Based Networking: Controllers like Cisco NSO, Juniper Apstra, or other Intent-Based Networking (IBN) platforms can abstract the underlying QinQ configurations. Instead of manual CLI commands, network engineers define the desired “intent” for customer connectivity. The controller translates this intent into vendor-specific CLI or API calls, ensuring consistency, compliance, and automated validation across the multi-vendor network.

- Integration with Overlays: In many modern service provider or data center interconnect scenarios, QinQ may serve as the foundational underlay for advanced EVPN/VXLAN or Segment Routing (SR) overlays. QinQ provides the robust Layer 2 transport over the physical infrastructure, while the overlay offers agile, virtualized network services for tenant isolation and mobility.

- Security and Zero Trust: Modern network architectures increasingly embed Zero Trust principles. While QinQ isolates customer traffic, integrating it with network access control (NAC) and security policy enforcement points ensures that only authorized devices can connect to the QinQ tunnel port.

- GitOps Workflows: Network configurations, including QinQ deployments, are increasingly managed through GitOps. This provides an audit trail, enables automated testing of configuration changes, and ensures that the network’s actual state converges with the desired state defined in Git.
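The "actual state converges with desired state" idea from the GitOps point can be illustrated with a small drift check. This is a sketch using only Python's standard `difflib`; the two config snippets are made-up examples, and in practice the desired text would come from your Git repository and the actual text from the device.

```python
import difflib


def config_drift(desired: str, actual: str) -> list[str]:
    """Return only the added/removed lines between desired and actual config."""
    diff = difflib.unified_diff(
        desired.splitlines(),
        actual.splitlines(),
        fromfile="git (desired)",
        tofile="device (actual)",
        lineterm="",
    )
    return [
        line for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


desired = """interface GigabitEthernet0/1
 switchport access vlan 15
 switchport mode dot1q-tunnel
 l2protocol-tunnel stp"""

actual = """interface GigabitEthernet0/1
 switchport access vlan 16
 switchport mode dot1q-tunnel"""

for line in config_drift(desired, actual):
    print(line)
```

Lines prefixed with `-` exist in Git but not on the device, and lines prefixed with `+` exist on the device only; an empty result means the port matches its declared state.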

Best Practices for Robust QinQ Deployments

To wrap things up, here are some enduring best practices I live by, now updated to reflect the modern networking landscape:

- MTU, MTU, MTU: Seriously, verify your MTU settings across the entire QinQ path, including all transit devices. Account for the extra bytes added by tags and consider setting jumbo frames (e.g., 9214 or 9216 bytes) across your backbone if you plan for future overlays or larger payloads.

- Native VLAN Discipline: Ensure provider native VLANs are explicitly tagged on all internal trunks and do not overlap with any customer VLANs. This prevents accidental traffic leakage and simplifies troubleshooting.

- Strategic L2PT: Only enable L2PT (or vendor‑specific L2 protocol forwarding) for customer protocols that are truly necessary end-to-end (e.g., STP, LLDP). Avoid enabling VTP tunneling unless absolutely unavoidable in legacy scenarios.

- Automation First: Embrace tools like Ansible, Nornir, and Python for configuration, verification, and day-2 operations. Implement Infrastructure as Code (IaC) and GitOps workflows to reduce human error and provide an auditable history of changes.

- Clear Documentation: Document your S-tag assignments, customer mappings, port configurations, and any specific L2PT or QoS policies thoroughly.

- Phased Rollouts and Validation: Never deploy changes network-wide without thorough testing. Use lab environments for validation and run automated pre-/post-checks to verify service health.

- Enhanced Observability: Implement streaming telemetry (gNMI/OpenConfig) and integrate with monitoring platforms to gain real-time insights into QinQ service performance.

- Security at the Edge: Apply granular security policies (e.g., port security, MAC-based ACLs, DHCP snooping) on customer-facing tunnel ports to protect against Layer 2 attacks and ensure tenant isolation.

QinQ tunneling, despite its age, continues to be a workhorse in service provider networks, offering robust Layer 2 segregation. By understanding its core principles and adapting to modern automation, observability, multi-vendor approaches, and security considerations, we can deploy and manage these services efficiently and reliably. The evolution of our tools and methodologies ensures that even foundational technologies like QinQ remain powerful components in today’s dynamic networking landscape.

I hope this comprehensive update provides a clearer, more modern perspective on QinQ tunneling. Feel free to share your thoughts or experiences in the comments below!
