How to Extend an Ethernet Cable for Faster Internet and Stable Connections

In this comprehensive guide, we'll walk you through how to extend an Ethernet cable without losing speed.

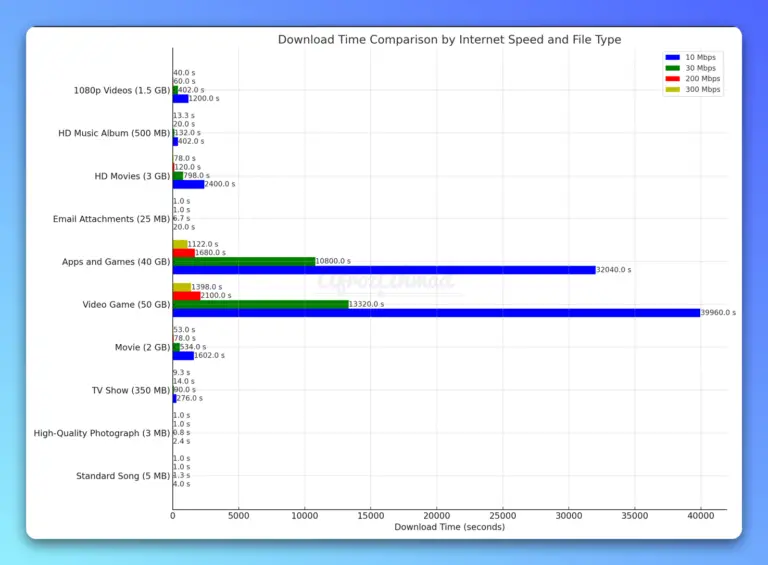

This article provides practical insights on the ideal internet speed of 30 Mbps for gaming, streaming, and working from home in 2023.

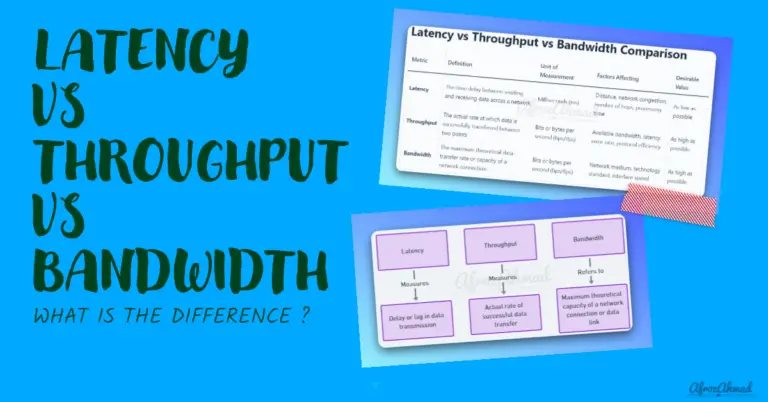

Learn how latency, throughput, and bandwidth differ in computer networking. Compare definitions, metrics, factors, and optimization of these key concepts for managing network performance.

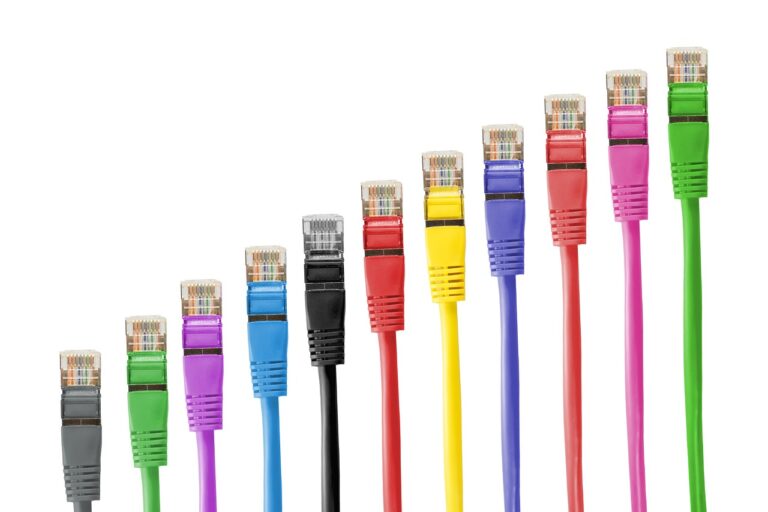

In this article, we provide a comprehensive comparison of Cat5, Cat5e, Cat6, Cat6a, Cat7, and Cat8 Ethernet cables to help you make informed decisions about your connectivity needs.

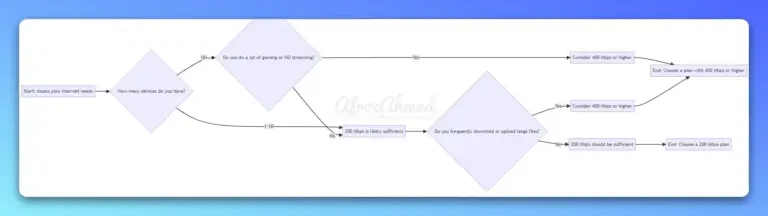

Is 200 Mbps fast enough for your needs? This guide will help you find out.

Want to know the difference between MTU and MSS? Read on to find out.

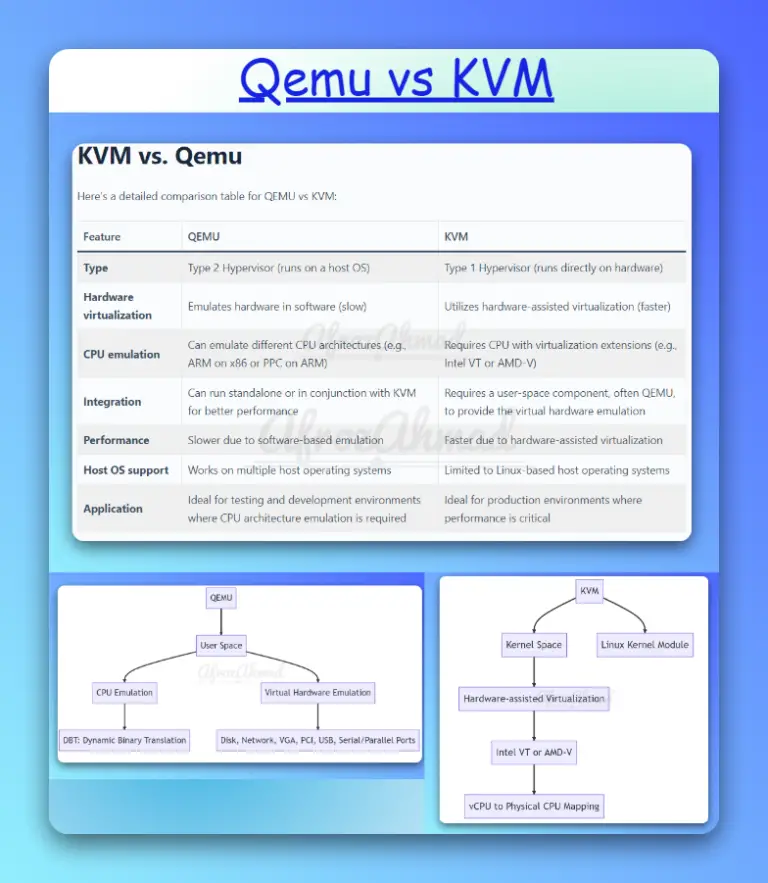

This article aims to provide a clear understanding of the similarities and differences between QEMU and KVM.

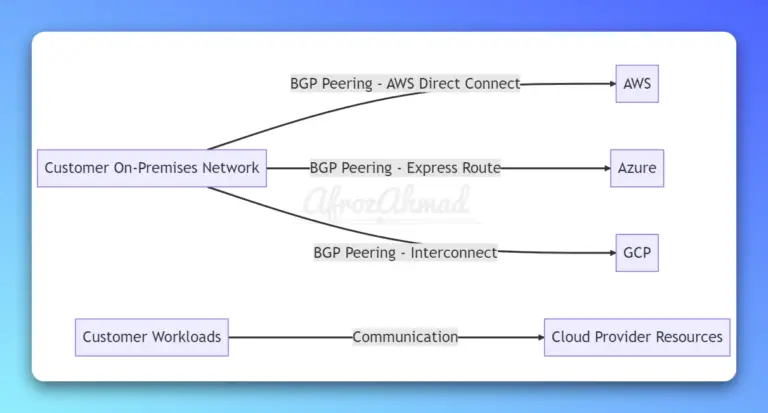

Discover how to use BGP in cloud networking, covering interconnecting cloud networks with customers' on-premises networks, connecting regions, internet routing, route aggregation, and ensuring high availability.

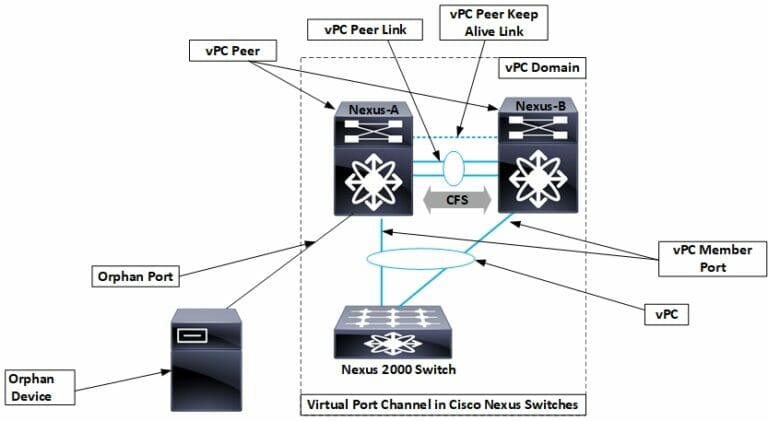

What is Cisco vPC and its brief history: What is Cisco vPC (virtual Port Channel), and how has the normal port-channel evolved to this point? Let us understand port-channel first; it is the simplest and oldest technology that combines two or more…

What if I told you there is one simple Windows 10 feature to turn off blue light, which is disabled by default and can help you sleep better, especially if you work at night on your laptop? One of the…