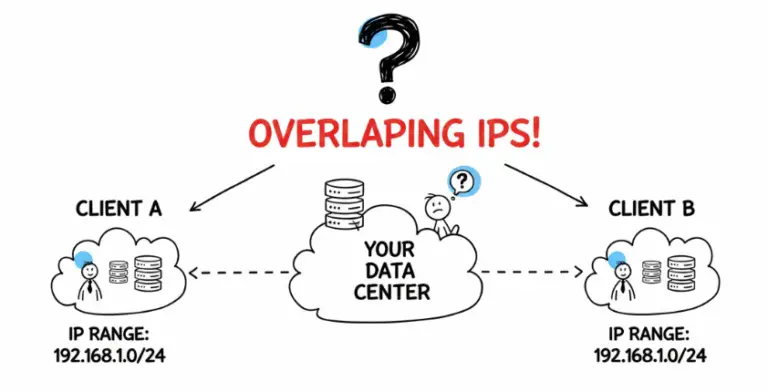

QEMU and KVM are both used to run virtual network functions (VNFs) in OpenStack and to build virtual labs in GNS3 or EVE-NG. Because the two are so often deployed together, it helps to understand where each one's role begins and ends.

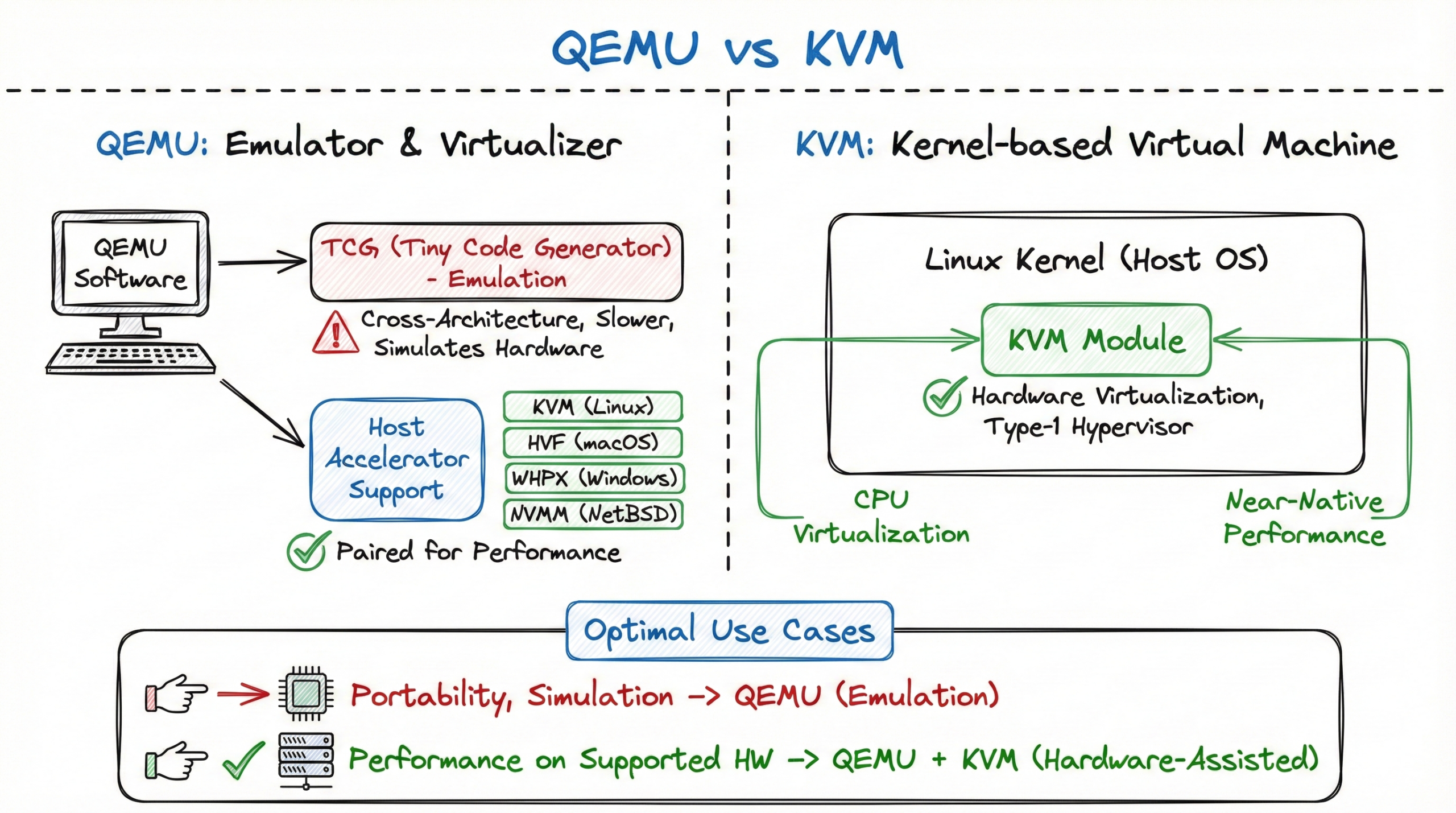

In Short: QEMU is an open‑source machine emulator and virtualizer. It can fully emulate CPUs and devices using its Tiny Code Generator (TCG) for cross‑architecture testing, or run as a hosted hypervisor when paired with hardware accelerators. On Linux, QEMU commonly uses KVM to achieve near‑native performance; on macOS and Windows, it can use HVF and WHPX respectively (and NVMM on NetBSD). KVM (Kernel‑based Virtual Machine) is a Linux kernel module that exposes hardware virtualization, turning Linux into a type‑1 hypervisor. In typical setups, QEMU provides the user‑space virtual hardware while KVM handles CPU virtualization for production‑grade performance.

While both serve as powerful tools for virtualization, their optimal use cases differ. If you need portability across CPU architectures or want to simulate hardware, QEMU’s emulation is ideal. If you need performance on supported hardware, pair QEMU with KVM (or host-specific accelerators) to leverage hardware-assisted virtualization.
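The choice between emulation and hardware-assisted virtualization mostly depends on whether the guest's CPU architecture matches the host's. The Python sketch below encodes that rule. The function names and the small alias table are hypothetical, and the sketch deliberately ignores compatible subsets such as 32-bit x86 guests on x86_64 hosts, which KVM can in fact run.

```python
"""Sketch: decide whether a VM can use hardware acceleration or needs TCG."""

# Hypothetical alias table: maps common spellings to one canonical name.
_ARCH_ALIASES = {
    "amd64": "x86_64",
    "x86_64": "x86_64",
    "arm64": "aarch64",
    "aarch64": "aarch64",
    "s390x": "s390x",
    "ppc64le": "ppc64le",
}


def normalize_arch(name: str) -> str:
    """Return a canonical architecture name (unknown names pass through lowercased)."""
    key = name.lower()
    return _ARCH_ALIASES.get(key, key)


def execution_mode(host_arch: str, guest_arch: str, accelerator_available: bool) -> str:
    """Return 'hardware-assisted' or 'tcg' for a host/guest pair.

    Accelerators (KVM, HVF, WHPX, NVMM) only run guest code of the host's own
    architecture; anything else must be translated by TCG. Simplification:
    compatible subsets (e.g. i386 guests on x86_64) are treated as different.
    """
    same_arch = normalize_arch(host_arch) == normalize_arch(guest_arch)
    if same_arch and accelerator_available:
        return "hardware-assisted"
    return "tcg"


if __name__ == "__main__":
    print(execution_mode("x86_64", "aarch64", accelerator_available=True))  # tcg
    print(execution_mode("amd64", "x86_64", accelerator_available=True))    # hardware-assisted
```

In other words, an accelerator only helps when it is present and the guest is native to the host; everything else falls back to TCG.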

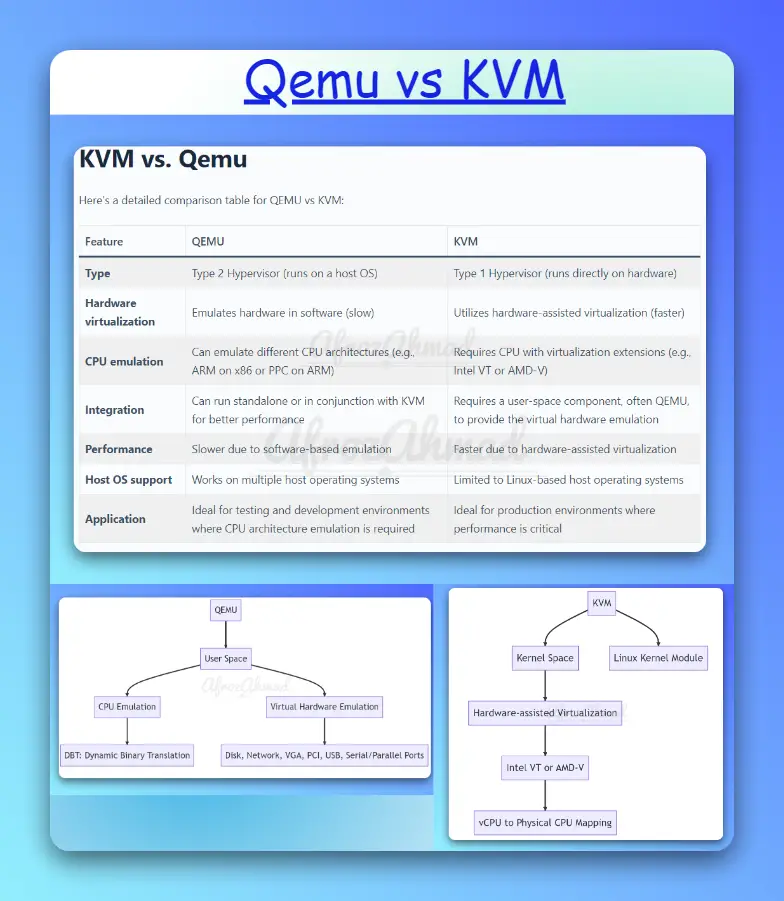

KVM vs. QEMU

Here’s a detailed comparison table for QEMU vs KVM:

| Feature | QEMU | KVM |
|---|---|---|
| Type / Role | Machine emulator & virtualizer (user space). Can run pure emulation (TCG) or use accelerators; often supplies device models and I/O (virtio). | Linux kernel module that exposes hardware virtualization; turns Linux into a type‑1 hypervisor and works with a user-space VMM (commonly QEMU). |
| Hardware virtualization | Software emulation via TCG (slower) or hardware-assisted via accelerators (fast). | Provides hardware-assisted virtualization (Intel VT‑x/AMD‑V, ARM virtualization, etc.). |
| CPU architecture support | Can emulate many architectures (e.g., ARM on x86, PPC on ARM). | Supports multiple host architectures on Linux (x86, ARM, IBM Z/s390x, POWER) with the right CPU extensions. |
| Integration | Runs standalone for emulation or with accelerators: KVM (Linux), HVF (macOS), WHPX (Windows), NVMM (NetBSD). | Kernel component; requires a user-space component (commonly QEMU) for VM lifecycle and device models. |
| Performance | TCG is slower; with accelerators, performance approaches native depending on workload and features (virtio, passthrough). | Near‑native performance for many workloads with proper configuration (CPU passthrough, virtio). |
| Host OS support | Runs on Linux, macOS, Windows, BSDs. Accelerator availability varies by OS. | Native to Linux hosts. Experimental/porting efforts exist elsewhere but are uncommon in production. |
| Application | Great for cross‑arch testing, CI, education, network labs (GNS3/EVE‑NG), and developer workflows on macOS/Windows using HVF/WHPX. | Ideal for production virtualization stacks (libvirt/virt-manager, Proxmox, oVirt/OpenShift/OpenStack) where performance and density matter. |

Please note that QEMU and KVM are often used together: QEMU provides the virtual hardware and device models, while KVM provides the hardware-assisted virtualization path, significantly improving performance compared to pure software emulation.
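To make the division of labour concrete, here is a minimal Python sketch that assembles a qemu-system-x86_64 command line in which KVM executes the guest CPU while QEMU supplies virtio disk and network devices. The flags used (-accel kvm, -cpu host, -drive ...,if=virtio, -nic user,model=virtio-net-pci) are standard QEMU options, but the helper name, the defaults, and the image name guest.qcow2 are assumptions for illustration.

```python
"""Sketch: assemble a qemu-system-x86_64 command line that uses KVM + virtio."""
import shlex
from typing import List


def qemu_kvm_argv(disk_image: str, memory_mib: int = 2048, vcpus: int = 2) -> List[str]:
    """Return an argv list for a KVM-accelerated x86_64 guest (hypothetical helper).

    QEMU supplies the virtual hardware (virtio disk and NIC); KVM executes the
    guest's CPU instructions, selected with -accel kvm.
    """
    return [
        "qemu-system-x86_64",
        "-accel", "kvm",          # hardware-assisted CPU execution through /dev/kvm
        "-cpu", "host",           # expose the host CPU model and its extensions
        "-smp", str(vcpus),
        "-m", str(memory_mib),    # guest RAM in MiB
        "-drive", f"file={disk_image},if=virtio,format=qcow2",  # paravirtualized disk
        "-nic", "user,model=virtio-net-pci",                    # paravirtualized NIC, user-mode networking
        "-nographic",             # serial console on the terminal instead of a window
    ]


if __name__ == "__main__":
    # "guest.qcow2" is a placeholder image name.
    print(shlex.join(qemu_kvm_argv("guest.qcow2")))
```

Dropping "-accel kvm" and "-cpu host" from this list would leave the same virtual hardware but run the guest under TCG, which is the slower pure-emulation path described in the table.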

Understanding Virtualization: The Basics

Before we dive into the intricacies of QEMU vs. KVM, it’s essential to understand the basics of virtualization. Virtualization creates software-defined representations of compute, storage, and networking that can be managed and allocated efficiently. Multiple virtual machines (VMs) can share a single physical host safely, enabling better utilization, isolation, and lifecycle management.


Hypervisors: The Heart of Virtualization

Hypervisors enable the creation and management of virtual machines. There are two broad categories:

- Type 1 (Bare Metal): Runs directly on the host’s hardware, offering strong isolation and performance. KVM effectively makes the Linux kernel operate as a type‑1 hypervisor.

- Type 2 (Hosted): Runs on top of a host operating system. QEMU fits this model: it runs as an ordinary user-space process on the host OS, whether it emulates the guest CPU in software (TCG) or hands guest execution to a hardware accelerator.

With this foundational knowledge, let’s explore QEMU and KVM in detail.

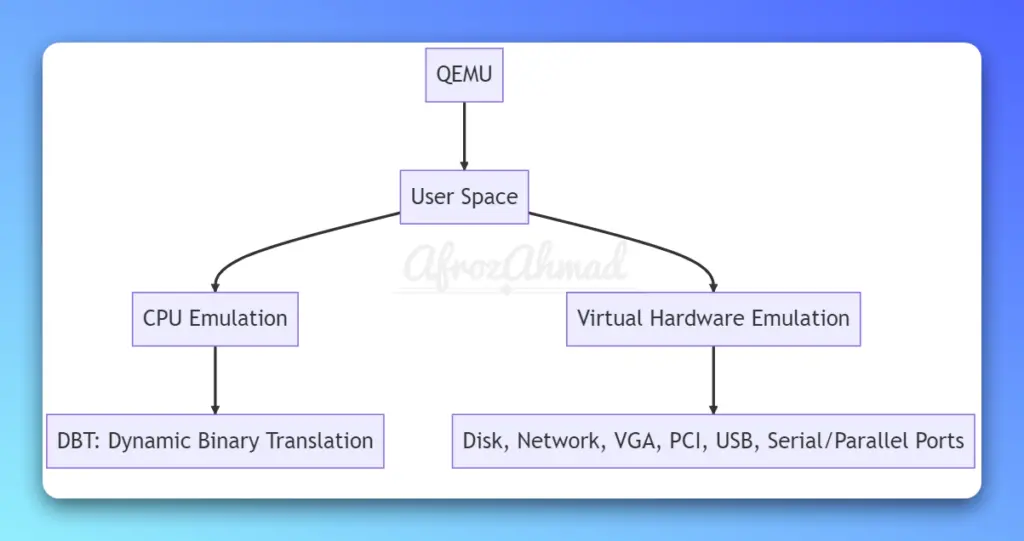

QEMU: A Flexible Emulator and Virtualizer

QEMU, the Quick Emulator, runs in user space and provides two primary modes: pure emulation using TCG (ideal for cross‑architecture work) and accelerated virtualization when paired with host-specific accelerators. It offers an extensive array of virtual hardware components, including disk, network, VGA, PCI, USB, serial/parallel ports, and virtio paravirtualized devices for high performance with accelerators.

One of QEMU’s biggest advantages is its ability to emulate different CPU architectures using dynamic binary translation. This lets you run, for example, ARM software on x86 hardware for testing and CI. When the guest and host share the same architecture and an accelerator is available, QEMU instead lets the accelerator run guest code directly on the CPU, which is much faster; cross-architecture guests still go through TCG.
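Dynamic binary translation is easier to picture with a toy. The sketch below invents a five-instruction guest ISA, translates each basic block into a Python function once, and caches it by guest program counter so hot loops are not retranslated. It only illustrates the general idea: QEMU's TCG translates guest code into an intermediate representation and then into real host machine code, and none of the names here come from QEMU.

```python
"""Toy dynamic binary translator with a translation-block cache.

The guest "ISA" is invented for illustration and is not QEMU's TCG:
  ("li", rd, imm)          rd = imm
  ("addi", rd, rs, imm)    rd = rs + imm
  ("add", rd, rs1, rs2)    rd = rs1 + rs2
  ("bnez", rs, target)     branch to target if rs != 0 (ends a block)
  ("halt",)                stop (ends a block)
"""
from typing import Callable, Dict, List, Optional, Tuple

BlockFn = Callable[[List[int]], Optional[int]]  # runs a block, returns next guest PC or None


def translate_block(program: List[Tuple], pc: int) -> BlockFn:
    """Translate guest code from pc up to the next branch/halt into one host (Python) function."""
    lines = ["def block(r):"]
    while True:
        ins = program[pc]
        op = ins[0]
        if op == "li":
            lines.append(f"    r[{ins[1]}] = {ins[2]}")
        elif op == "addi":
            lines.append(f"    r[{ins[1]}] = r[{ins[2]}] + {ins[3]}")
        elif op == "add":
            lines.append(f"    r[{ins[1]}] = r[{ins[2]}] + r[{ins[3]}]")
        elif op == "bnez":
            lines.append(f"    return {ins[2]} if r[{ins[1]}] != 0 else {pc + 1}")
            break
        elif op == "halt":
            lines.append("    return None")
            break
        else:
            raise ValueError(f"unknown instruction {ins!r}")
        pc += 1
    namespace: dict = {}
    exec("\n".join(lines), namespace)  # "emit" host code once per block (trusted toy input only)
    return namespace["block"]


class ToyTranslator:
    def __init__(self, program: List[Tuple]):
        self.program = program
        self.cache: Dict[int, BlockFn] = {}  # guest PC -> translated block
        self.translations = 0                # cache misses, i.e. blocks translated

    def run(self, num_regs: int = 4) -> List[int]:
        regs = [0] * num_regs
        pc: Optional[int] = 0
        while pc is not None:
            block = self.cache.get(pc)
            if block is None:
                block = translate_block(self.program, pc)
                self.cache[pc] = block
                self.translations += 1
            pc = block(regs)  # execute translated code; it returns the next guest PC
        return regs


if __name__ == "__main__":
    # Sum 5 + 4 + 3 + 2 + 1 into r1.
    prog = [
        ("li", 0, 5),
        ("li", 1, 0),
        ("add", 1, 1, 0),   # loop body starts at PC 2
        ("addi", 0, 0, -1),
        ("bnez", 0, 2),
        ("halt",),
    ]
    t = ToyTranslator(prog)
    print(t.run()[1], t.translations)  # 15 3
```

Running it prints 15 and 3: the loop body at PC 2 executes four times but is translated only once, which is the reason translation caches make DBT practical.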

Modern QEMU releases support multiple accelerators across platforms: KVM on Linux, HVF on macOS, WHPX on Windows, and NVMM on NetBSD. TCG remains the software fallback when hardware acceleration is unavailable.
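Because QEMU accepts the -accel option more than once and moves on to the next accelerator if one fails to initialize, a launcher can list the host's native accelerator first and TCG last. This is a minimal sketch under that assumption; the mapping keys are the OS names reported by Python's platform.system(), and whether a given accelerator actually works still depends on how QEMU was built and how the host is configured (for example, WHPX requires the Windows Hypervisor Platform feature to be enabled).

```python
"""Sketch: choose QEMU -accel options for a host OS, with TCG as the fallback."""
import platform
from typing import List, Optional

# Host OS (as reported by platform.system()) -> accelerator QEMU can use there.
_ACCEL_BY_OS = {
    "Linux": "kvm",
    "Darwin": "hvf",    # macOS Hypervisor.framework
    "Windows": "whpx",  # Windows Hypervisor Platform
    "NetBSD": "nvmm",
}


def accel_args(system: Optional[str] = None) -> List[str]:
    """Return -accel arguments: the host's accelerator first (if any), then TCG."""
    system = system or platform.system()
    args: List[str] = []
    hw = _ACCEL_BY_OS.get(system)
    if hw:
        args += ["-accel", hw]
    args += ["-accel", "tcg"]  # software emulation, available everywhere
    return args


if __name__ == "__main__":
    print(accel_args())
```

Listing TCG last keeps the VM bootable on hosts without acceleration, at the cost of silently running slower; a production launcher might prefer to fail loudly instead.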

This diagram represents the QEMU virtualization technology. QEMU operates in user space and provides CPU emulation through dynamic binary translation (DBT). With accelerators enabled, QEMU can execute guest code using hardware virtualization while still emulating or paravirtualizing devices as needed.

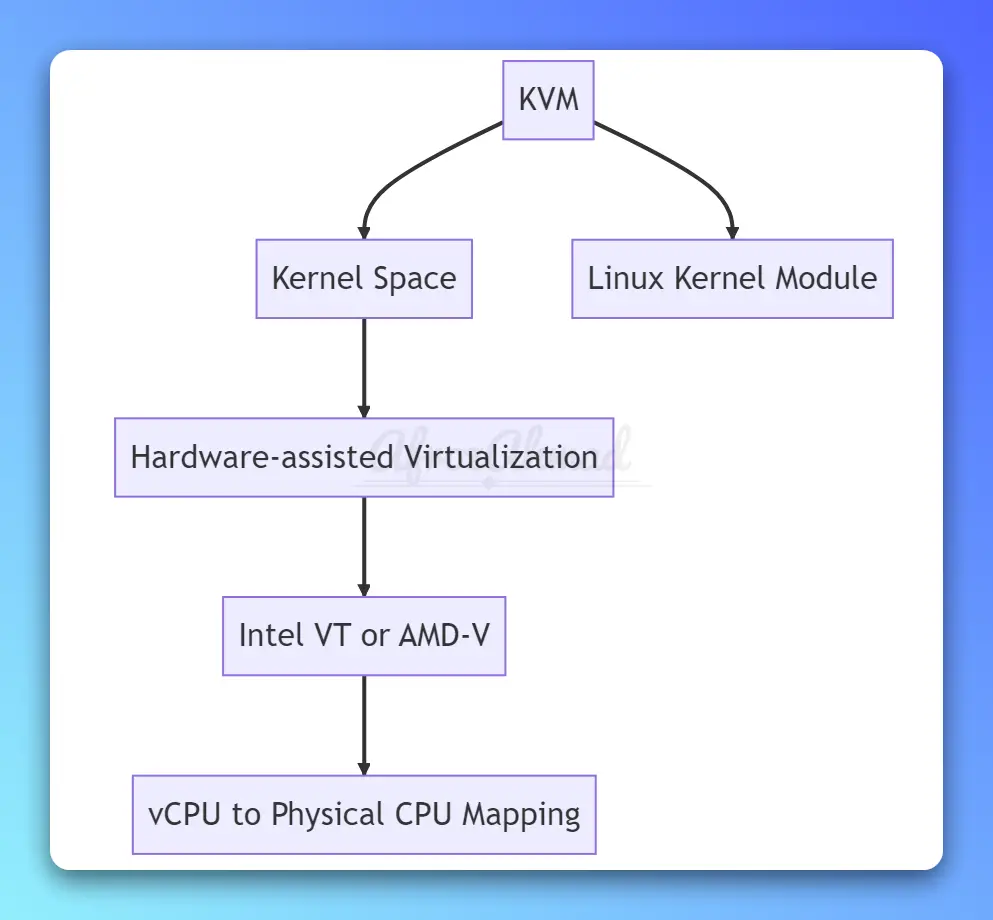

KVM: A High-Performance Linux Hypervisor Module

KVM (Kernel-based Virtual Machine) is integrated into the Linux kernel as a module and enables hardware-assisted virtualization on supported CPUs. KVM is not limited to x86; it also supports architectures such as ARM, IBM Z (s390x), and POWER on Linux when the processor provides virtualization extensions.
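On a Linux host, KVM is exposed to user space through the /dev/kvm device and ioctl() calls. The sketch below opens that device and issues KVM_GET_API_VERSION, which the kernel's KVM API documentation says returns 12; the request number 0xAE00 is derived from the _IO(KVMIO, 0x00) macro in linux/kvm.h. Treat it as a quick probe rather than a full capability check; the helper name is hypothetical.

```python
"""Sketch: probe the Linux KVM interface via /dev/kvm (Linux only)."""
import os
from typing import Optional

KVM_GET_API_VERSION = 0xAE00  # _IO(KVMIO=0xAE, 0x00) from <linux/kvm.h>


def kvm_api_version(path: str = "/dev/kvm") -> Optional[int]:
    """Return the KVM API version (12 on current kernels) or None if KVM is unusable."""
    try:
        import fcntl  # POSIX-only module; absent on Windows
    except ImportError:
        return None
    try:
        fd = os.open(path, os.O_RDWR)
    except OSError:
        # Device missing (module not loaded / no CPU support) or no permission.
        return None
    try:
        return fcntl.ioctl(fd, KVM_GET_API_VERSION)  # integer arg -> integer ioctl result
    except OSError:
        return None  # e.g. the path exists but is not a KVM device
    finally:
        os.close(fd)


if __name__ == "__main__":
    print(kvm_api_version())
```

A None result usually means the kvm module is not loaded, the CPU lacks (or firmware disables) virtualization extensions, or the current user lacks access to /dev/kvm.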

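With hardware-assisted virtualization, guest kernels run directly on the CPU in a special mode (such as VMX non‑root on Intel VT‑x). Sensitive operations, such as certain privileged instructions, port I/O, and accesses to emulated device memory, trigger VM exits to the hypervisor, which handles them and resumes the guest. KVM handles many exits inside the kernel and returns others, such as I/O to devices that QEMU emulates, to the user-space VMM. This trap‑and‑emulate design avoids the heavy binary translation techniques used before modern CPU extensions and delivers near‑native performance for many workloads.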
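The trap-and-emulate cycle can be sketched as a loop: enter the guest, wait for a VM exit, emulate the operation that caused it, resume. The Python below simulates that loop without touching real hardware. The exit names echo KVM's KVM_EXIT_IO, KVM_EXIT_MMIO, and KVM_EXIT_HLT, but the classes, the scripted exits, and the device address are hypothetical; a real VMM such as QEMU calls the KVM_RUN ioctl and reads the exit reason from a kvm_run structure shared with the kernel.

```python
"""Simulated trap-and-emulate loop, loosely modelled on a VMM driving KVM_RUN.

No real KVM calls are made: FakeVcpu stands in for the hardware and the kernel.
"""
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List, Tuple


class ExitReason(Enum):
    # Names echo KVM_EXIT_IO / KVM_EXIT_MMIO / KVM_EXIT_HLT; the values here are arbitrary.
    IO = auto()
    MMIO = auto()
    HLT = auto()


@dataclass
class VmExit:
    reason: ExitReason
    address: int = 0  # I/O port or guest-physical address
    data: int = 0


@dataclass
class FakeVcpu:
    """Replays scripted exits; each run() stands for guest code running natively until it traps."""
    script: List[VmExit]
    pos: int = 0

    def run(self) -> VmExit:
        ex = self.script[self.pos]
        self.pos += 1
        return ex


@dataclass
class ToyVmm:
    serial_out: List[int] = field(default_factory=list)
    mmio_writes: List[Tuple[int, int]] = field(default_factory=list)

    def handle(self, ex: VmExit) -> bool:
        """Emulate the trapped operation; return False when the guest has halted."""
        if ex.reason is ExitReason.IO:
            if ex.address == 0x3F8:  # COM1 data port: capture the byte
                self.serial_out.append(ex.data)
            # writes to other ports are ignored in this sketch
        elif ex.reason is ExitReason.MMIO:
            self.mmio_writes.append((ex.address, ex.data))
        elif ex.reason is ExitReason.HLT:
            return False
        return True

    def run(self, vcpu: FakeVcpu) -> None:
        while True:
            ex = vcpu.run()          # "enter the guest" until the next VM exit
            if not self.handle(ex):  # emulate, then loop to resume the guest
                break


if __name__ == "__main__":
    vcpu = FakeVcpu([
        VmExit(ExitReason.IO, 0x3F8, ord("o")),
        VmExit(ExitReason.IO, 0x3F8, ord("k")),
        VmExit(ExitReason.MMIO, 0xFEBF0000, 1),  # hypothetical device register
        VmExit(ExitReason.HLT),
    ])
    vmm = ToyVmm()
    vmm.run(vcpu)
    print(bytes(vmm.serial_out).decode())  # ok
```

Performance in the real system depends on keeping this loop cold: every exit costs a round trip, which is one reason virtio devices are designed to minimize trapping accesses.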

Modern processors with virtualization extensions, like Intel VT‑x and AMD‑V, allow efficient execution of guest code while isolating it from the host. Combined with virtio paravirtualized drivers and features such as VFIO/PCI passthrough for direct device access, KVM-based stacks can achieve excellent I/O performance.
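On x86 Linux hosts, a common first check for these extensions is to look for the vmx (Intel VT-x) or svm (AMD-V) flag in /proc/cpuinfo. This minimal sketch parses that text; it is x86-specific (ARM and other architectures report features differently), the function names are hypothetical, and the authoritative test remains whether the kvm_intel or kvm_amd module loads and /dev/kvm appears.

```python
"""Sketch: check /proc/cpuinfo-style text for x86 virtualization extensions."""
from typing import Optional


def x86_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return 'vmx' (Intel VT-x), 'svm' (AMD-V), or None if no 'flags' line lists either."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


def host_x86_virt_extension(path: str = "/proc/cpuinfo") -> Optional[str]:
    """Read the host's cpuinfo (Linux only); return None if it cannot be read."""
    try:
        with open(path, encoding="utf-8") as f:
            return x86_virt_extension(f.read())
    except OSError:
        return None


if __name__ == "__main__":
    print(host_x86_virt_extension())
```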

This diagram represents the KVM virtualization technology. KVM operates as a Linux kernel module within kernel space, providing hardware-assisted virtualization using supported CPU extensions. A user-space VMM such as QEMU manages the VM lifecycle and provides the virtual devices and I/O paths.

The Synergy between QEMU and KVM

Although QEMU can function independently, its software emulation is slower than hardware-assisted execution. To address this, QEMU can utilize KVM as an accelerator on Linux, and equivalent accelerators on other hosts (HVF on macOS, WHPX on Windows, NVMM on NetBSD). Together, QEMU provides the device models and management while KVM (or the host accelerator) executes most guest code directly on the CPU.

A Historical Perspective

The relationship between QEMU and KVM often leads to confusion. KVM was merged into the mainline Linux kernel in version 2.6.20 as a kernel virtualization module. A separate user-space fork called qemu‑kvm existed to integrate KVM support with QEMU; that fork was later merged upstream into QEMU (from version 1.3), so current QEMU includes KVM support without a separate fork.

Making the Right Choice: QEMU, KVM, or Both?

When selecting a virtualization solution, it’s crucial to consider the specific requirements of your organization. Here are some factors to keep in mind:

- Performance: For high performance and consolidation density, pair QEMU with KVM on Linux and use virtio drivers. Consider VFIO/PCI passthrough for latency‑sensitive devices, and CPU passthrough (QEMU's -cpu host) to expose the host CPU's full instruction set to the guest.

- Hardware Support: KVM requires CPU virtualization extensions. If your hardware or host OS lacks acceleration, QEMU’s TCG still provides full emulation at lower speed for testing and cross‑arch builds.

- Operating System: On Linux, QEMU+KVM is the common production stack (libvirt/virt‑manager, Proxmox, oVirt/OpenStack). On macOS and Windows, QEMU can use HVF or WHPX for developer workflows. QEMU also runs on the BSDs, with NVMM acceleration available on NetBSD.
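For the VFIO/PCI passthrough mentioned under Performance, QEMU takes one -device vfio-pci,host=... option per passed-through device. The sketch below appends those options to an existing argv and rejects malformed addresses. It assumes the host has an IOMMU enabled and the devices are already bound to the vfio-pci driver; the helper name and the example address 0000:01:00.0 are hypothetical.

```python
"""Sketch: append VFIO PCI passthrough devices to a QEMU argv."""
import re
from typing import Iterable, List

# PCI address in domain:bus:device.function form, e.g. 0000:01:00.0
_PCI_ADDR = re.compile(r"^[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]$")


def with_vfio_devices(argv: List[str], pci_addresses: Iterable[str]) -> List[str]:
    """Return a copy of argv with one '-device vfio-pci,host=<addr>' pair per address."""
    out = list(argv)
    for addr in pci_addresses:
        if not _PCI_ADDR.match(addr):
            raise ValueError(f"not a full PCI address: {addr!r}")
        out += ["-device", f"vfio-pci,host={addr}"]
    return out


if __name__ == "__main__":
    base = ["qemu-system-x86_64", "-accel", "kvm", "-cpu", "host"]
    print(with_vfio_devices(base, ["0000:01:00.0"]))
```

Requiring the full domain-qualified address is a design choice of this sketch; it avoids ambiguity on hosts with more than one PCI domain.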

In many cases, using QEMU in conjunction with KVM provides the best of both worlds: the flexibility of QEMU’s emulation and device models with the performance of hardware-assisted virtualization.

Conclusion

In summary, QEMU is a machine emulator and virtualizer that runs in user space and can operate in pure emulation mode (TCG) or use accelerators. KVM is a Linux kernel virtualization module that enables hardware-assisted virtualization and, together with a user-space VMM like QEMU, effectively forms a type‑1 hypervisor stack.

Understanding the distinct roles of QEMU and KVM—and how they complement each other—helps you choose the right approach for your workload. Use QEMU alone for portability and cross‑architecture testing, and combine QEMU with KVM (or host‑specific accelerators) when you need production performance.

Used together, QEMU and KVM give you both flexibility and performance. With a clear understanding of these technologies, you can make well‑informed decisions that meet your specific virtualization needs, whether on Linux servers, developer laptops, or lab environments.
